Mixed Reality (MR) is the foundational technology behind Spatial Computing. It is the technology that allows digital content to understand and interact with your physical environment in real time. As a form of immersive technology, Mixed Reality combines real and virtual environments, enabling users to interact with both seamlessly and providing immersive sensory experiences.

While Augmented Reality (AR) overlays digital information on the physical world, and Virtual Reality (VR) creates fully immersive digital environments, Mixed Reality combines elements of both: virtual objects can be occluded by real objects, cast shadows on real surfaces, and respond to real-world physics.

This guide provides a comprehensive technical breakdown of MR technology for enterprise decision-makers evaluating spatial computing solutions. We cover the underlying hardware architectures, key metrics that determine user experience quality, enterprise use cases with documented ROI, and the strategic roadmaps of major platform vendors through 2027.


TL;DR

What is Mixed Reality? MR is a core component of Spatial Computing that enables digital content to understand and interact with physical space in real time.

Current Use Cases: Enterprise training (up to 90% cost reduction vs classroom methods), surgical planning, industrial maintenance with remote expert overlay, and design visualization.

Key Challenges: Current hardware limitations include battery life (typically 2-3 hours on standalone headsets), device weight, and achieving a comfortable motion-to-photon latency.

Market Opportunity: The global AR/VR/MR/XR market is projected to reach $200.87 billion by 2030, growing at a 22.0% CAGR (Compound Annual Growth Rate) from $59.76B in 2024, with healthcare emerging as one of the leading adoption sectors.

What is Mixed Reality?

Mixed Reality merges the real and virtual worlds to create environments where physical and digital objects coexist and interact in real time. As a form of spatial computing, it combines elements of both VR and AR. Rather than simply overlaying digital content onto the real world (as AR does) or fully immersing users in a virtual environment (as VR does), MR creates experiences where virtual content is aware of, anchored to, and responsive to the physical world. MR systems continuously scan, map, and understand the geometry of a physical environment, allowing virtual content to behave as if it truly exists within that space.

This spatial understanding depends on a device's ability to track its position, map surrounding surfaces, and recognize objects. Two core capabilities distinguish true MR from simpler augmented displays:

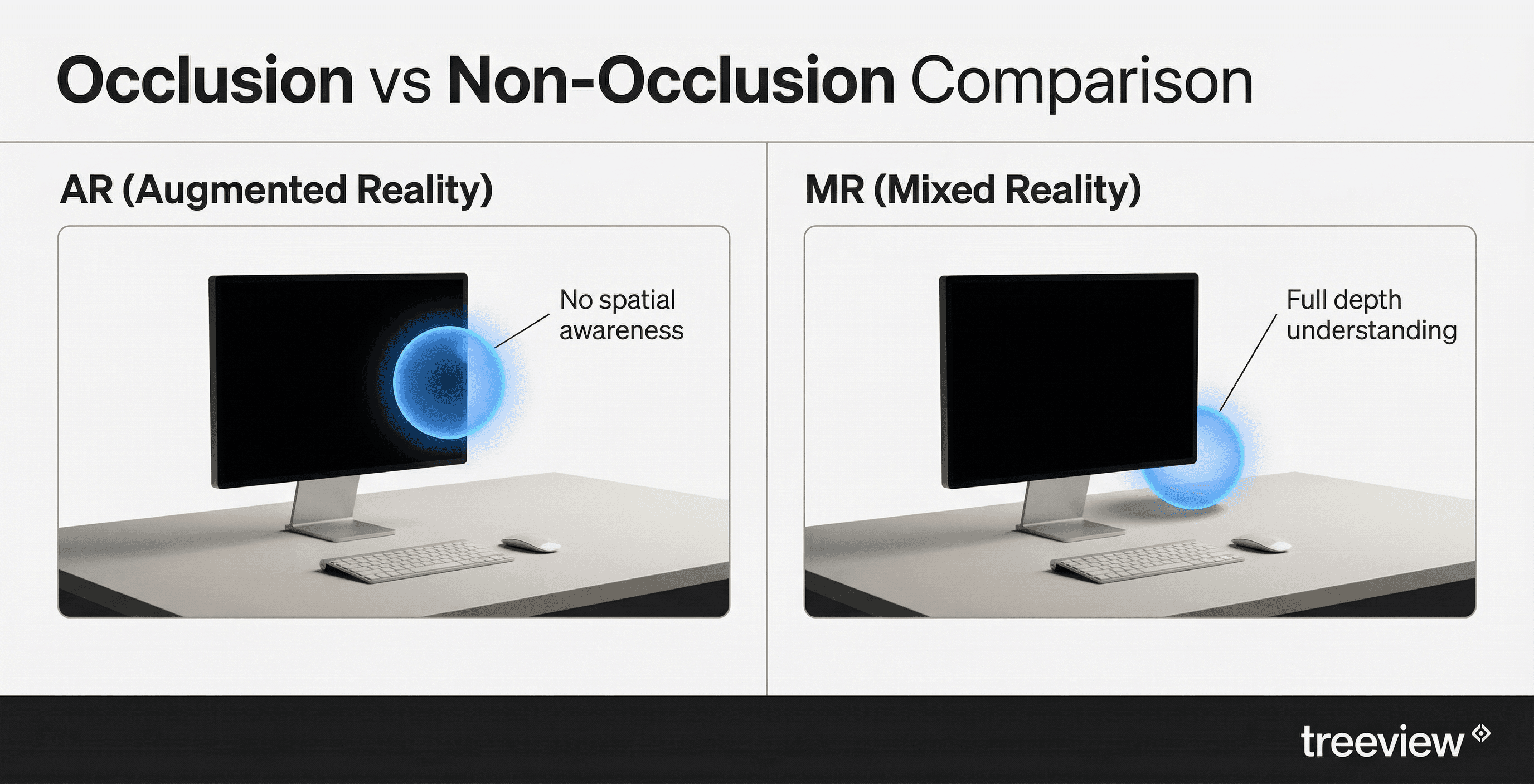

Occlusion: This is the visual effect that makes virtual objects appear hidden behind real-world objects, adding a layer of realism.

Anchoring: This pins virtual objects to specific locations in the physical environment, so they remain fixed in place as you move around them.

For example, in basic AR, a virtual ball placed on your desk would simply float at a fixed position on the screen. In MR, that same ball rests on the desk surface, stays in place as you move your head, bounces off real walls, and disappears behind your monitor. This spatial understanding is what makes MR the more advanced technology, enabling more complex, high-value use cases.
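The occlusion behavior in this example reduces to a per-pixel depth comparison. The sketch below is a minimal illustration rather than any vendor's rendering pipeline: the function names and depth values are hypothetical, and real systems typically run this test on the GPU against a depth map produced by the headset's sensors.

```python
from typing import List, Optional


def composite_pixel(real_depth_m: float, virtual_depth_m: Optional[float]) -> str:
    """Decide which layer is visible at a single pixel.

    virtual_depth_m is None where no virtual object covers the pixel.
    """
    if virtual_depth_m is None:
        return "real"
    # The virtual object is drawn only if it is closer to the viewer
    # than the real surface measured at the same pixel.
    return "virtual" if virtual_depth_m < real_depth_m else "real"


def composite_row(real_depths: List[float],
                  virtual_depths: List[Optional[float]]) -> List[str]:
    """Apply the depth test across one row of pixels."""
    return [composite_pixel(r, v) for r, v in zip(real_depths, virtual_depths)]


# Hypothetical scene: a wall 3.0 m away, a monitor 1.0 m away covering the
# right half of the row, and a virtual ball 1.5 m away in the middle.
real_row = [3.0, 3.0, 1.0, 1.0]
ball_row = [None, 1.5, 1.5, None]

visible = composite_row(real_row, ball_row)
print(visible)  # ['real', 'virtual', 'real', 'real']
```

The ball is visible in front of the wall but hidden where the closer monitor covers it, which is the "disappears behind your monitor" effect described above.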

The term Spatial Computing predates Apple's headset, but it entered mainstream use as the umbrella category covering AR, VR, and MR with the release of Apple Vision Pro in 2024. Apple avoided existing industry terminology and positioned Vision Pro under a niche term it could control, Spatial Computing. This category term is now being adopted industry-wide because it better captures the convergence happening in hardware: most modern headsets can operate across the full reality spectrum, from pure VR to full MR passthrough.

Mixed Reality vs Virtual Reality vs Augmented Reality

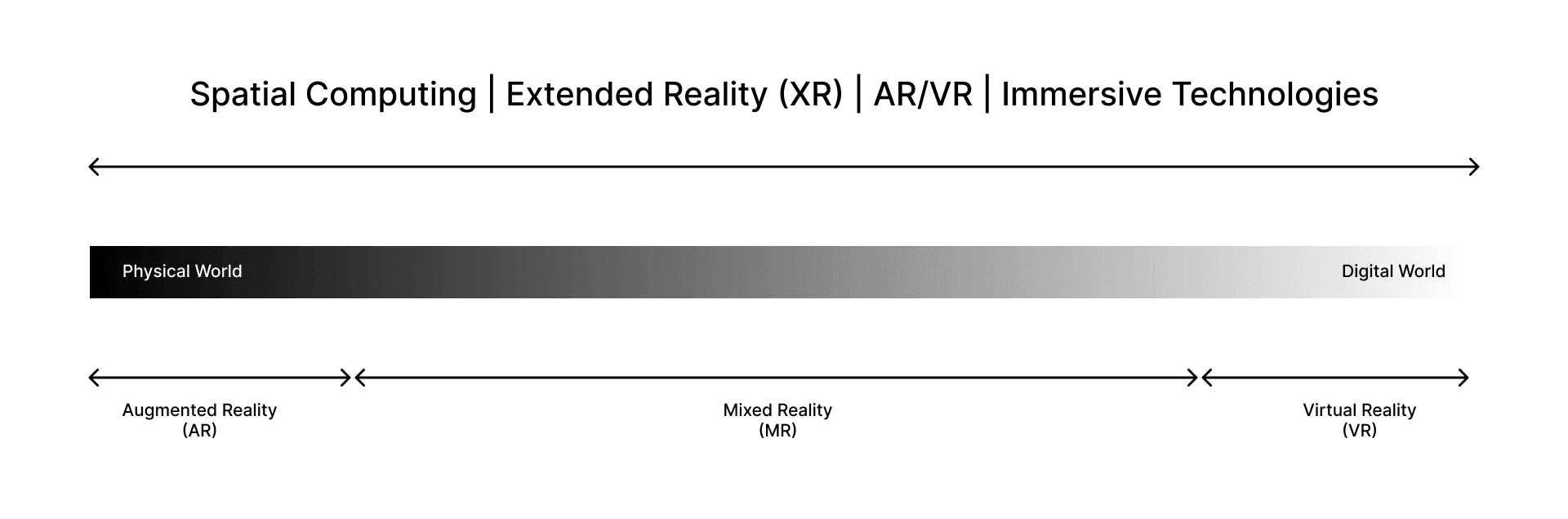

Extended Reality (XR) serves as the overarching umbrella term for the complete spectrum of computer-altered reality. The "X" acts as a variable, representing any current or future spatial technology. It functions as the collective wrapper for the three primary modalities: Augmented Reality (AR), Virtual Reality (VR), and Mixed Reality (MR), unifying them into a continuous spectrum rather than treating them as separate silos.

While often used interchangeably with Spatial Computing, the two terms describe distinct aspects of the ecosystem: XR refers to the medium (the specific level of immersion), whereas Spatial Computing refers to the interaction model (the computer's ability to perceive and understand three-dimensional space).

Mastering the distinction between MR, VR, and AR is critical for engineering leaders, as it directly influences hardware selection, platform architecture, and the feasibility of enterprise use cases.

| Capability | Virtual Reality (VR) | Augmented Reality (AR) | Mixed Reality (MR) |
|---|---|---|---|
| Environment | Fully virtual; the real world is blocked out. | Real world with a digital overlay. | Real and virtual worlds interact spatially. |
| Spatial Awareness | Room-scale tracking only. | Basic surface detection. | Full 3D mesh and semantic understanding. |
| Occlusion | Not applicable. | Limited or none. | Full occlusion (virtual objects hide behind real ones). |
| Interaction Model | Controllers, hand tracking in a virtual space, eyes, and voice. | Touch screen and gaze. | Hands, eyes, voice, and the physical environment as active inputs for interaction. |
| Example Devices | HTC Vive Pro 2, Valve Index, Meta Quest 2, Sony PlayStation VR | Mobile AR (ARKit/ARCore), Google Glass | Apple Vision Pro, Meta Quest 3, Microsoft HoloLens 2, Magic Leap 2, Samsung Galaxy XR, Meta Orion |

How Mixed Reality Technology Works

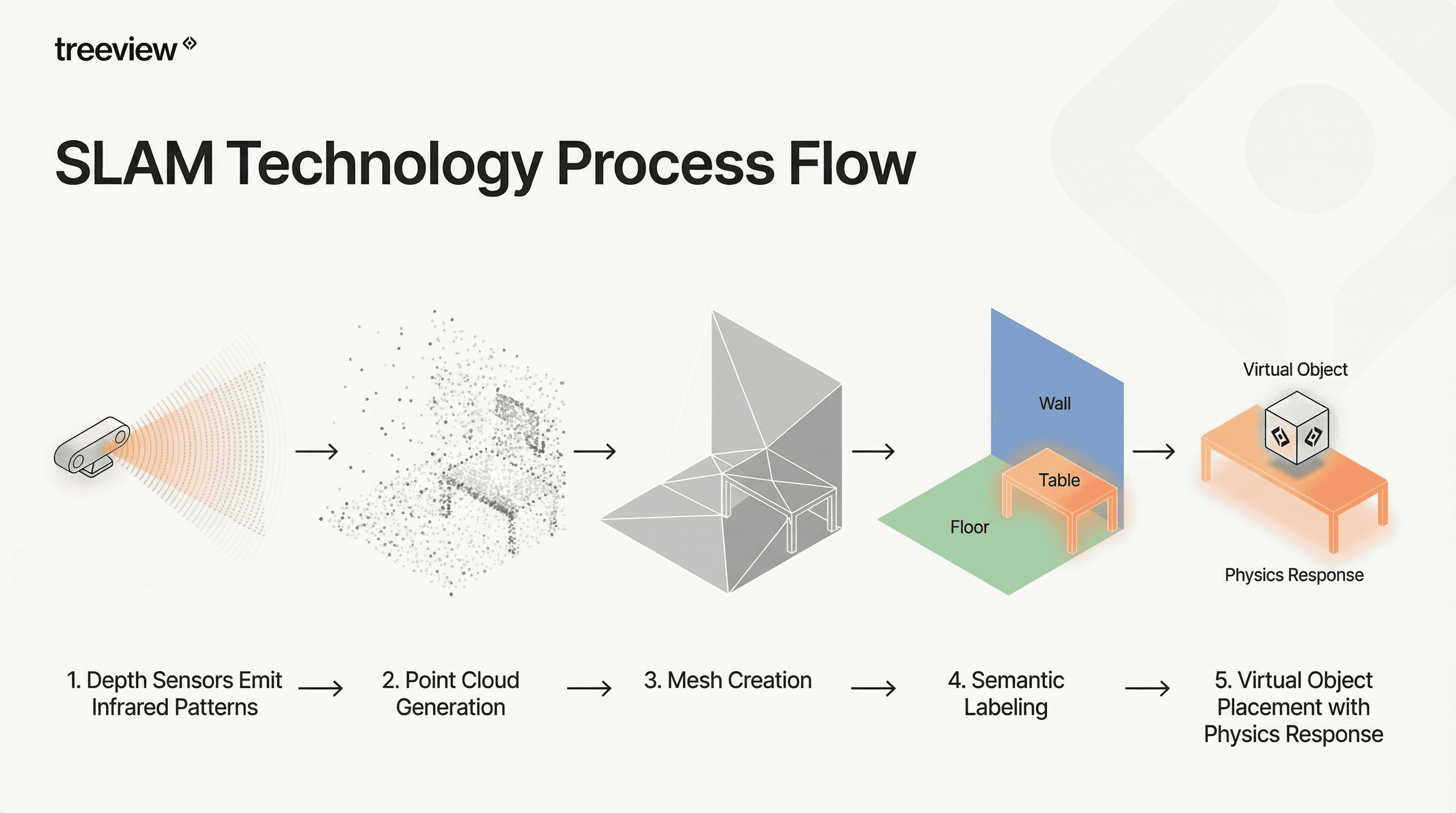

MR devices build a real-time 3D map of an environment using a process called Simultaneous Localization and Mapping (SLAM). The device's sensors continuously scan the surroundings while tracking the user's position within that space. This enables precise spatial awareness and interaction, allowing the headset to know not just where virtual objects should appear, but how they should behave as you move. Accurate tracking of the user's head position and orientation is essential for a seamless overlay of digital content in the real world.

The core technologies that power this process include:

Depth Sensors and LiDAR: These measure the distance to surfaces and objects, creating a precise 3D point cloud of the environment.

RGB Cameras: These capture visual information for texture mapping and object recognition.

Scene Understanding Algorithms: With advanced Computer Vision and Artificial Intelligence (AI), these algorithms process sensor data to identify not just geometry but also semantic meaning, such as recognizing a flat surface as a "table" or a vertical plane as a "wall."

Spatial Audio: Premium MR devices incorporate spatial audio to provide immersive sound that is accurately attached to digital objects or the environment.

Speech Input: Many MR environments support speech input, allowing users to control virtual elements and interact with the system using voice commands.
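To make the depth-sensing step concrete, the sketch below back-projects depth pixels into 3D points using the standard pinhole camera model, one common way to build a point cloud from a depth image. The intrinsics (`fx`, `fy`, `cx`, `cy`) and the tiny depth image are assumed values chosen for illustration, not the parameters of any specific headset, and the function names are hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]


def backproject(u: int, v: int, depth_m: float,
                fx: float, fy: float, cx: float, cy: float) -> Point:
    """Convert one depth pixel (u, v) into a 3D point in camera coordinates."""
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


def depth_image_to_points(depth: List[List[float]],
                          fx: float, fy: float,
                          cx: float, cy: float) -> List[Point]:
    """Back-project every valid pixel of a depth image into a point cloud."""
    points = []
    for v, row in enumerate(depth):
        for u, d in enumerate(row):
            if d > 0:  # in this sketch, 0 marks "no depth reading"
                points.append(backproject(u, v, d, fx, fy, cx, cy))
    return points


# Assumed 3x3 depth image in metres; the centre pixel sees a closer object.
depth = [
    [2.0, 2.0, 0.0],
    [2.0, 1.0, 2.0],
    [2.0, 2.0, 2.0],
]
cloud = depth_image_to_points(depth, fx=1.0, fy=1.0, cx=1.0, cy=1.0)
print(len(cloud), "points")
```

A production SLAM pipeline would go further and fuse many such frames, each transformed by the tracked device pose, into one persistent map.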

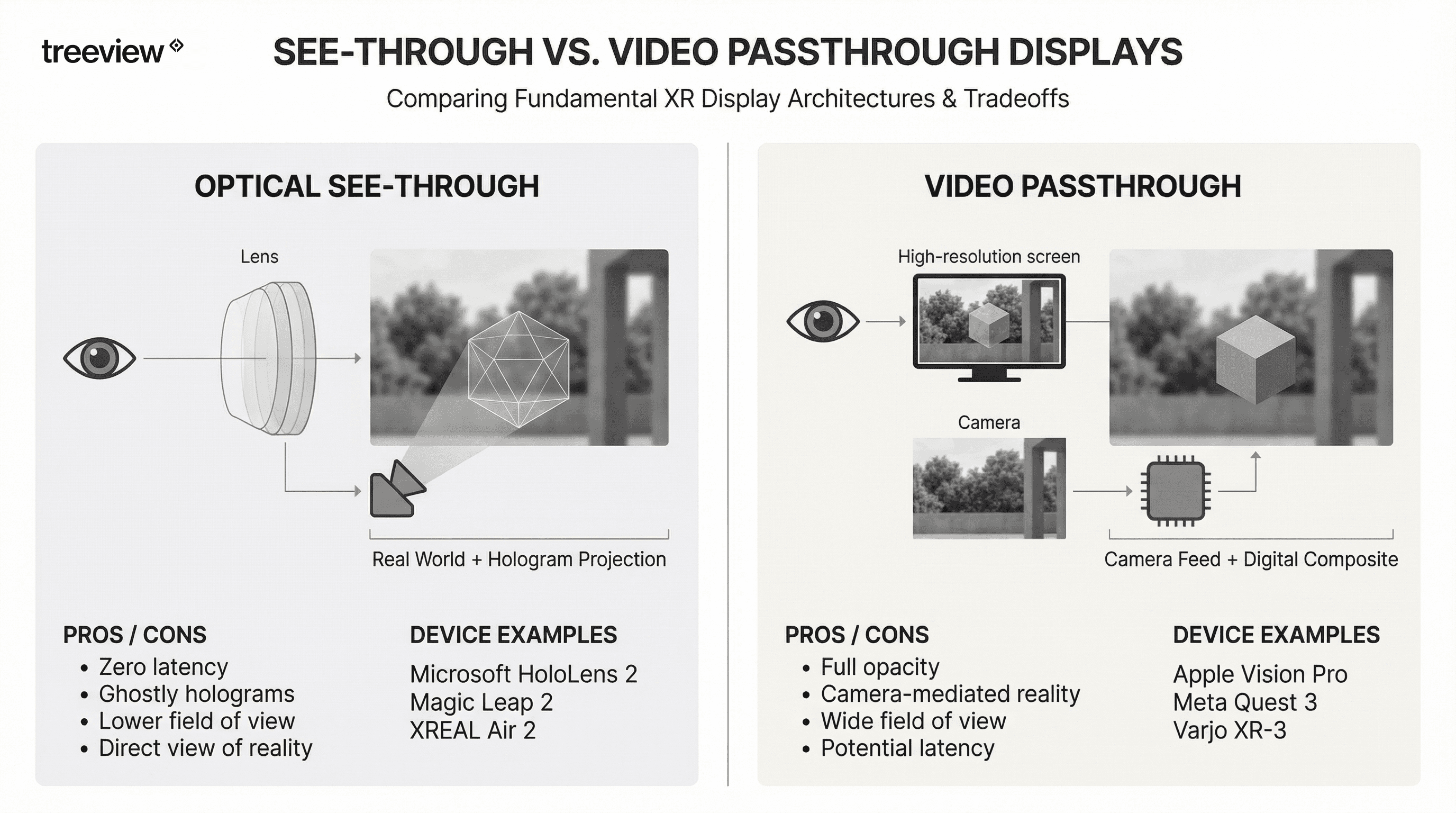

Optical See-Through vs. Video Passthrough Displays

How a user sees the real world through an MR headset fundamentally shapes the experience. Two primary approaches dominate the market today.

Optical See-Through (OST): Devices like the Microsoft HoloLens 2, Magic Leap 2, and Meta Orion use transparent lenses to project holographic images directly into your line of sight. You see the real world with your own eyes, with digital content layered on top. The main advantage is natural vision with zero latency on the real-world view. The limitation is that holograms can appear translucent or "ghostly," because most see-through displays add light to the scene rather than block it.

Video Passthrough (VST): Devices like the Apple Vision Pro, Samsung Galaxy XR and Meta Quest 3 use cameras to capture the real world and display it on screens inside the headset. Virtual objects are then digitally composited into this video feed, allowing them to appear fully solid and opaque. The tradeoff is that your perception of reality is mediated by cameras, which can introduce latency or color shifts.

Motion-to-Photon Latency

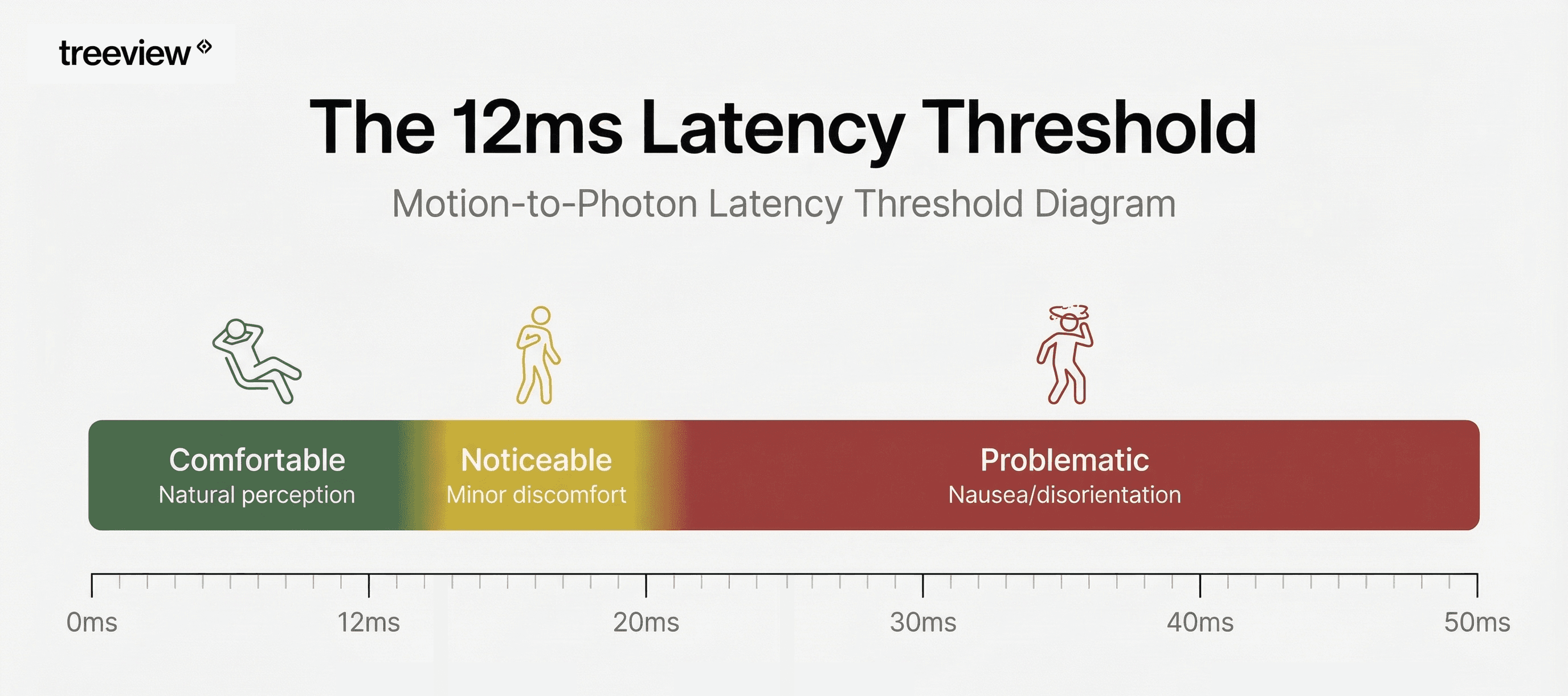

Comfort depends heavily on motion-to-photon latency, the delay between your head movement and the display updating to match. In video passthrough systems this is critical: if the world you see lags behind your actual motion, the mismatch can trigger nausea and disorientation.

Passthrough latency directly impacts user comfort in extended sessions. Apple's Vision Pro sets the benchmark at approximately 12 milliseconds, while mainstream devices like Meta Quest 3 operate in the 35-40ms range. For enterprise deployments, the practical question isn't whether a device meets an ideal threshold but whether the latency is acceptable for the specific use case and session duration. Training applications with 15-30 minute sessions have different tolerance requirements than all-day productivity workflows. This is why deployment planning must account for real-world comfort testing, not just spec-sheet comparisons.
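One way to reason about these figures is to convert latency into how far the displayed world trails the head during a turn: the lag angle is roughly head angular speed multiplied by latency. The sketch below applies that approximation to the latencies quoted above. The 100 degrees-per-second head speed is an assumed value for a moderate head turn, not a measured figure, and the function name is hypothetical.

```python
def angular_lag_deg(head_speed_deg_per_s: float, latency_ms: float) -> float:
    """Approximate angle by which the displayed image trails the head.

    Uses the first-order estimate: lag = angular speed * latency.
    """
    return head_speed_deg_per_s * latency_ms / 1000.0


HEAD_SPEED_DEG_PER_S = 100.0  # assumption: a moderate head turn

for label, latency_ms in [("12 ms", 12.0), ("35 ms", 35.0), ("40 ms", 40.0)]:
    lag = angular_lag_deg(HEAD_SPEED_DEG_PER_S, latency_ms)
    print(f"{label}: image trails the head by about {lag:.1f} degrees")
```

Under this assumption the lag grows from about 1.2 degrees at 12 ms to 3.5-4.0 degrees at 35-40 ms. Faster head movement scales the error proportionally, which is one reason comfort testing should reflect how users actually move in the target workflow.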

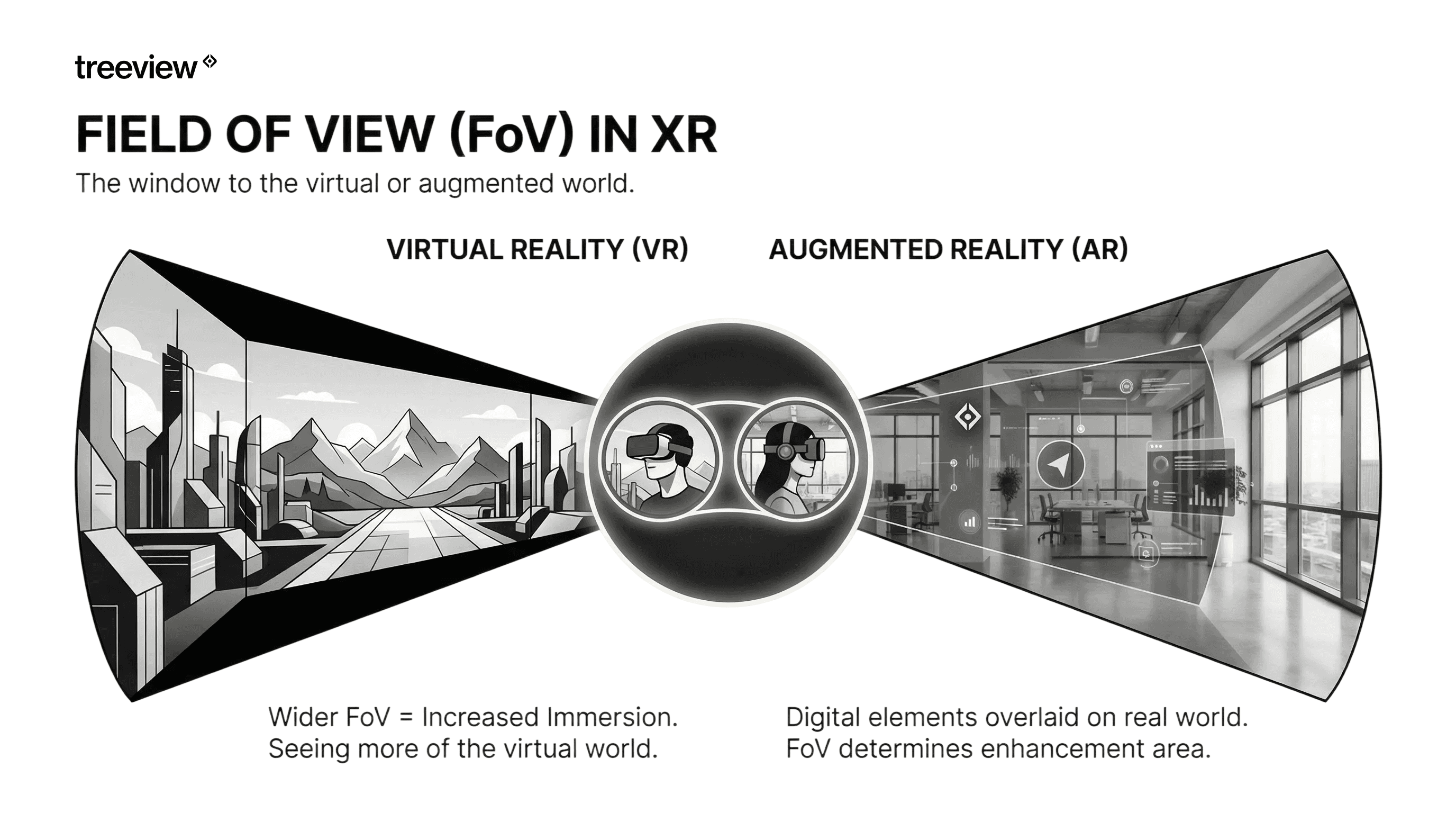

Beyond latency, buyers must evaluate Field of View (FoV). While the human eye sees approximately 200 degrees horizontally, most headsets offer between 90° and 110°. A wider FoV increases immersion but often requires heavier optics, a trade-off that is critical for all-day wearability.

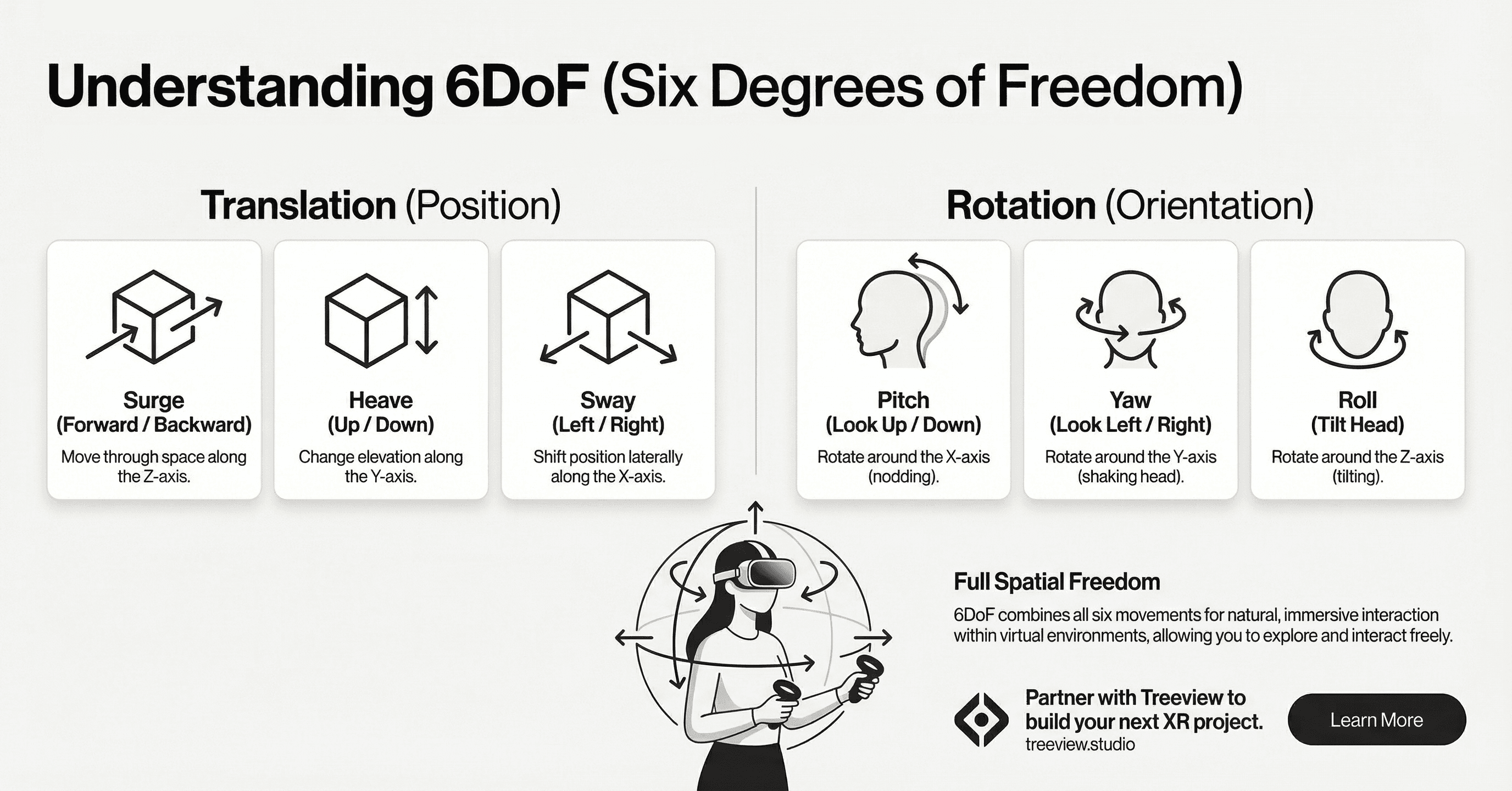

6DoF (Six Degrees of Freedom)

A critical distinction in this spectrum is 6DoF (Six Degrees of Freedom) versus 3DoF. Older VR headsets tracked only head rotation (3DoF: pitch, yaw, and roll), so you could look around but not move through the scene. Modern Spatial Computing uses 6DoF tracking, which adds your position along the X, Y, and Z axes. This allows you to physically walk through a model, crouch to inspect details, and lean around corners, movements that are essential for enterprise inspection workflows.
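The difference shows up directly in the math used to place anchored content. The following sketch expresses a world-anchored point in head coordinates, once with a full tracked position and once with position ignored, as a rotation-only tracker would do. It is a simplified illustration under stated assumptions: only yaw rotation is modeled (pitch and roll are omitted for brevity), the axis convention is x right, y up, z forward, and all names are hypothetical.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def world_to_head(anchor: Vec3, head_pos: Vec3, head_yaw_deg: float) -> Vec3:
    """Express a world-anchored point in head coordinates.

    Translate by the head position, then undo the head's yaw rotation.
    """
    dx = anchor[0] - head_pos[0]
    dy = anchor[1] - head_pos[1]
    dz = anchor[2] - head_pos[2]
    yaw = math.radians(head_yaw_deg)
    x = math.cos(yaw) * dx - math.sin(yaw) * dz
    z = math.sin(yaw) * dx + math.cos(yaw) * dz
    return (x, dy, z)


def world_to_head_3dof(anchor: Vec3, head_yaw_deg: float) -> Vec3:
    """Rotation-only tracking: the head is assumed to never leave the origin."""
    return world_to_head(anchor, (0.0, 0.0, 0.0), head_yaw_deg)


# A label anchored 2 m in front of the starting position.
label = (0.0, 0.0, 2.0)
# The user steps 1 m to the right without turning.
head_after_step = (1.0, 0.0, 0.0)

six_dof = world_to_head(label, head_after_step, 0.0)
three_dof = world_to_head_3dof(label, 0.0)
print("6DoF:", six_dof)    # label now appears 1 m to the left
print("3DoF:", three_dof)  # label appears unchanged, so it follows the user
```

With 6DoF the label shifts left in the user's view after the sidestep, so it stays fixed in the room. With rotation-only tracking it stays dead ahead, which means it effectively travels with the user.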

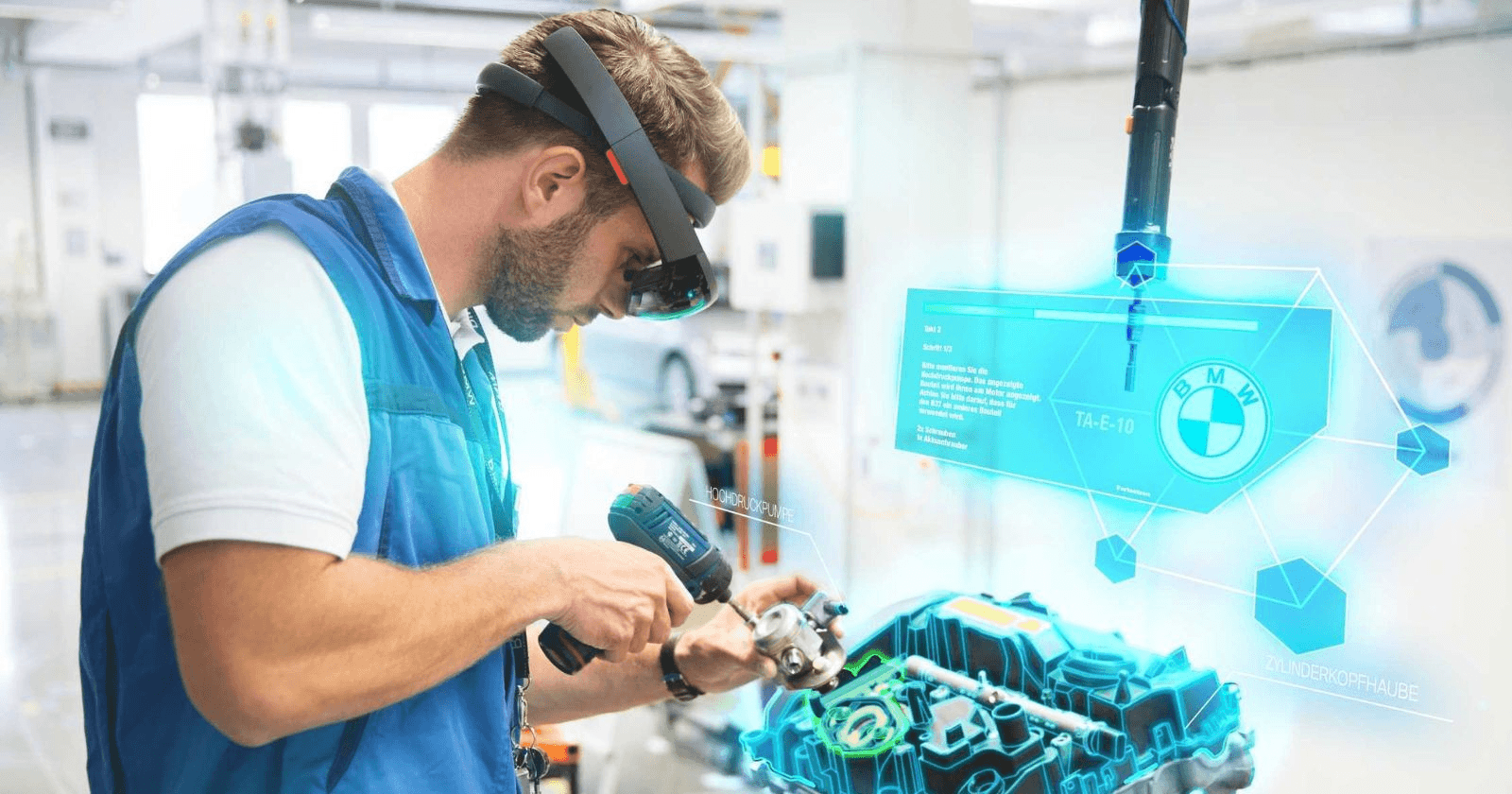

The practical implication is clear: if a field technician needs to see digital work instructions overlaid on a machine, with notes that stay anchored to specific parts as they move, they need MR technology. Simple AR would just show instructions floating in their view, moving with their gaze instead of staying fixed to the equipment.

Mixed Reality Hardware in 2025

The enterprise mixed reality hardware industry has consolidated around a small number of platform-defining players, led by Meta, Apple, and Google, each shaping the market with distinct device strategies, ecosystems, and price tiers. As a result, current enterprise MR deployments tend to center on a handful of flagship devices, each optimized for different use cases, technical requirements, and budget constraints.

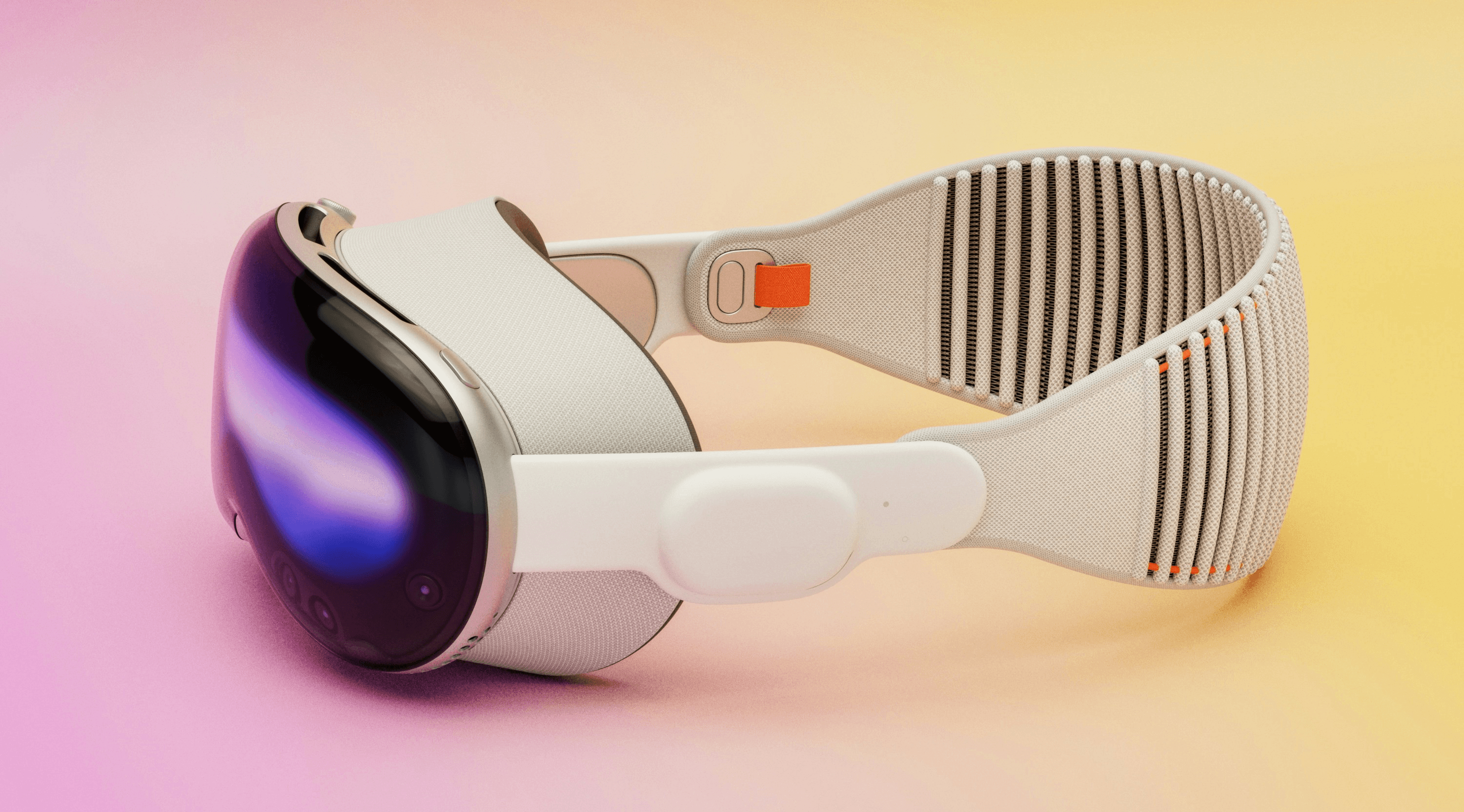

Apple Vision Pro

Price: $3,499 | Architecture: Video Passthrough | Display: 23 million pixels total (micro-OLED)

Vision Pro sets the benchmark for display quality and passthrough latency. The M5 chip handles application processing while the R1 chip is dedicated entirely to sensor fusion and display, achieving the industry-leading ~12ms passthrough latency. The device integrates seamlessly with the Apple ecosystem, making it attractive for organizations already using Mac and iPad infrastructure.

Best for: Design visualization, executive presentation environments, knowledge worker productivity applications requiring text legibility.

Microsoft HoloLens 2

Price: $3,500 (standard) / $4,500 (Industrial Edition) | Architecture: Optical See-Through | Display: 2K 3:2 per eye

HoloLens 2 remains the established enterprise standard due to its integration with Microsoft Azure, Dynamics 365, and existing enterprise software stacks. The optical see-through design provides zero real-world latency, making it suitable for applications where uninterrupted situational awareness is critical. The Industrial Edition meets IP50 requirements for dusty environments and is rated for hazardous locations.

Best for: Remote assist, guided procedures in industrial and healthcare settings, applications requiring safety certification, organizations invested in Microsoft ecosystem.

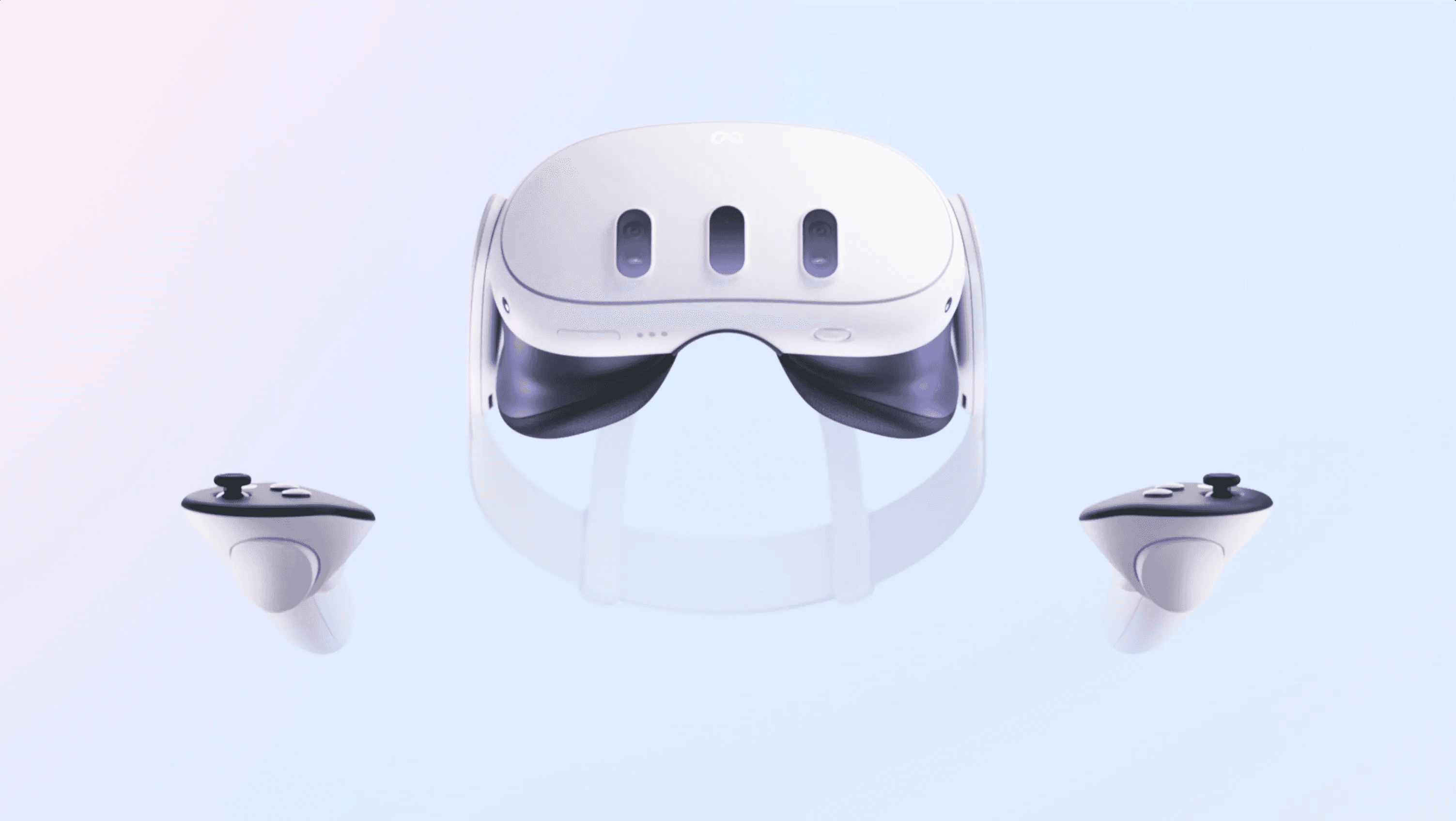

Meta Quest 3 / Quest 3S

Price: $499 (Quest 3) / $299 (Quest 3S) | Architecture: Video Passthrough | Display: 2064 x 2208 per eye

While Meta held a 74.6% share throughout 2024, its market share shifted to 50.8% in Q1 2025 as the market became more competitive, with XREAL capturing a 12.1% share in the same quarter. The Snapdragon XR2 Gen 2 processor provides excellent performance at a fraction of the cost of premium devices. A Meta for Business subscription enables centralized device management, custom app deployment, and shared device modes.

Best for: Scalable training deployments, organizations requiring large device fleets, VR/MR hybrid use cases, budget-conscious implementations.

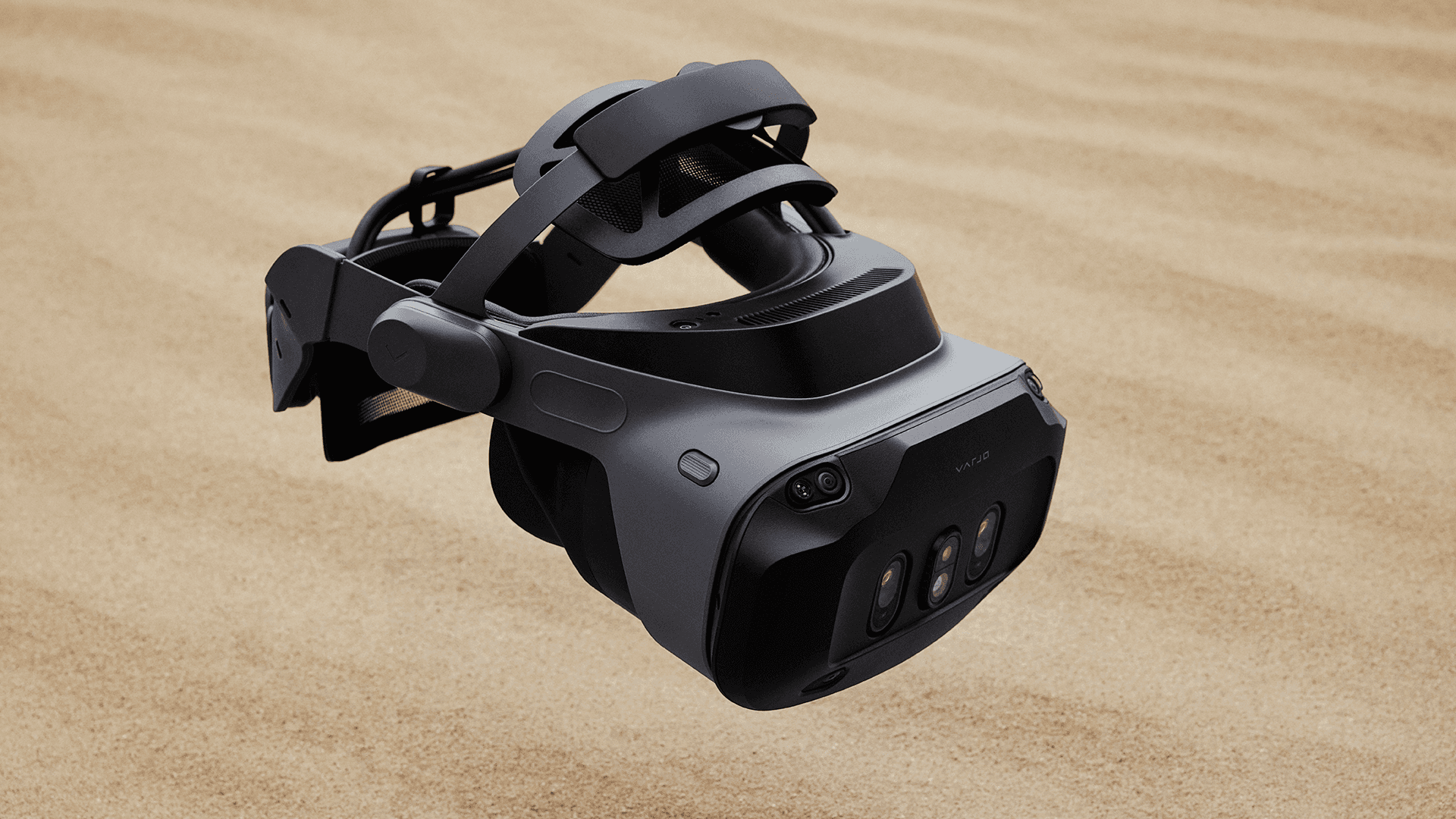

Varjo XR-4

Price: $3,990 | Architecture: Video Passthrough | Display: 51 PPD (human-eye resolution in focus area)

Varjo targets professional simulation and visualization markets where visual fidelity is paramount. The bionic display uses foveated rendering with eye tracking to achieve human-eye resolution in the gaze area. This enables reading small text and seeing fine detail that other headsets cannot reproduce.

Best for: Flight simulation, military, automotive design review, surgical training where visual precision is non-negotiable.

Samsung Galaxy XR

Price: $1,799 | Architecture: Video Passthrough | Display: 29 million pixels total (micro-OLED, 4,032 ppi)

Both displays combined feature 29 million pixels, which is more than the 23 million pixels offered by Apple's Vision Pro, while the device weighs 545 grams, around 50-100 grams lighter than Apple's headset. Like Vision Pro, Galaxy XR uses a tethered battery pack rated for approximately two hours of general use. The Galaxy XR works within Samsung Knox Manage and Android Enterprise, so admins can handle remote setup, encryption, and monitoring just as they do with phones and tablets, while integration with the broader Galaxy ecosystem lets users answer smartphone calls in XR and connect to Windows PCs.

Best for: Organizations already invested in the Android and Google ecosystem, enterprise teams prioritizing AI-driven spatial experiences, and deployments that benefit from multimodal interaction powered by Gemini, combining vision, voice, gesture, and contextual understanding directly within immersive environments.

Mixed Reality Hardware: Comparison Guide

| Specification | Apple Vision Pro | Microsoft HoloLens 2 | Meta Quest 3 | Meta Quest 3S | Varjo XR-4 | Samsung Galaxy XR |
|---|---|---|---|---|---|---|
| Price | $3,499 | $3,500 (Industrial: $4,500) | $499 | $299 | $3,990 | $1,799 |
| Architecture | Video Passthrough | Optical See-Through | Video Passthrough | Video Passthrough | Video Passthrough | Video Passthrough |
| Display | 23M pixels (micro-OLED) | 2K 3:2 per eye | 2064 x 2208 per eye | 1832 x 1920 per eye | 51 PPD (human-eye resolution) | 29M pixels (micro-OLED) |
| Processor | Apple M5 + R1 (M2 in the 2024 model) | Qualcomm Snapdragon 850 | Snapdragon XR2 Gen 2 | Snapdragon XR2 Gen 2 | Custom + PC tethered | Snapdragon XR2+ Gen 2 |
| Passthrough Latency | ~12ms | N/A (optical) | ~25ms | ~25ms | ~12ms | Not disclosed |
| Field of View | 100° | 52° | 110° | 96° | 120° | 109° |
| Weight | 600-650g | 566g | 515g | 512g | 670g | 545g |
| Battery Life | 2-2.5 hours | 2-3 hours | 2.2 hours | 2.5 hours | External power | 2-2.5 hours |
| Standalone | Yes | Yes | Yes | Yes | No (PC required) | Yes |
| Enterprise Management | Apple MDM | Azure, Intune, Dynamics 365 | Meta for Business | Meta for Business | Varjo Reality Cloud | Knox Manage, Android Enterprise |
| Best For | Design visualization, text-heavy productivity | Industrial procedures, Microsoft ecosystem | Scalable training deployments | Budget fleet rollouts | Flight simulation, surgical training | Android/Google ecosystem, mid-tier enterprise |
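For teams that want to turn this comparison into a repeatable shortlist, the table can be encoded as data and filtered against project constraints. The sketch below is one possible way to do that, not a recommended procurement tool: the `Headset` class and `shortlist` function are hypothetical, the figures are copied from the table above, and where the table gives a range (Vision Pro weight) the upper bound is used.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Headset:
    name: str
    price_usd: int
    architecture: str
    fov_deg: int
    weight_g: int
    standalone: bool


# Values taken from the comparison table; base prices only.
DEVICES = [
    Headset("Apple Vision Pro", 3499, "video passthrough", 100, 650, True),
    Headset("Microsoft HoloLens 2", 3500, "optical see-through", 52, 566, True),
    Headset("Meta Quest 3", 499, "video passthrough", 110, 515, True),
    Headset("Meta Quest 3S", 299, "video passthrough", 96, 512, True),
    Headset("Varjo XR-4", 3990, "video passthrough", 120, 670, False),
    Headset("Samsung Galaxy XR", 1799, "video passthrough", 109, 545, True),
]


def shortlist(devices: List[Headset],
              max_price_usd: Optional[int] = None,
              max_weight_g: Optional[int] = None,
              architecture: Optional[str] = None,
              standalone_only: bool = False) -> List[Headset]:
    """Return devices meeting every given constraint, cheapest first."""
    picks = [
        d for d in devices
        if (max_price_usd is None or d.price_usd <= max_price_usd)
        and (max_weight_g is None or d.weight_g <= max_weight_g)
        and (architecture is None or d.architecture == architecture)
        and (not standalone_only or d.standalone)
    ]
    return sorted(picks, key=lambda d: d.price_usd)


# Example: a standalone fleet device under $2,000.
fleet = shortlist(DEVICES, max_price_usd=2000, standalone_only=True)
print([d.name for d in fleet])

# Example: devices that keep a direct optical view of the workplace.
optical = shortlist(DEVICES, architecture="optical see-through")
print([d.name for d in optical])
```

Hard constraints like these only narrow the field; factors the table cannot capture, such as comfort over a full session, still need hands-on evaluation.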

Current MR devices are expanding their input systems toward Hand Tracking and Eye Tracking. This 'Natural User Interface' allows workers to manipulate digital machinery using their bare hands, keeping them free to use physical tools simultaneously.

While visual fidelity is high for current MR hardware, the next frontier is Haptics. Current systems rely on visual and audio cues, but emerging accessories are introducing haptic feedback gloves that allow users to 'feel' the resistance of a virtual valve or the texture of a digital surface.

AI is increasingly becoming a core input system for mixed reality applications. Modern MR experiences no longer rely solely on user-driven inputs like hands, eyes, or voice. AI systems can now interpret both in-app content and the user’s physical environment simultaneously. With spatial awareness, sensor data, and multimodal models working together, AI gains real-time context about what the user is seeing, doing, and interacting with, enabling more adaptive, anticipatory, and intelligent MR experiences.

Mixed Reality Applications Across Industries

The enterprise adoption of MR is driven by measurable business outcomes and a clear return on investment. MR has transitioned from experimental use to a practical tool across several major industries, improving efficiency and safety. Mixed Reality technologies also have promising applications that are beginning to see adoption in healthcare, architecture, and education. Here are some of the most impactful use cases.

Manufacturing & Logistics

Manufacturing shows strong XR adoption: 75% of industrial companies that have implemented VR/AR at large scale report operational improvements of 10%.

Virtual Factory Planning enables manufacturers to simulate entire production lines before physical construction begins. BMW Group uses NVIDIA Omniverse to create digital twins spanning over one million square meters across 31 factories worldwide. Factory planners test design changes in simulation before committing to real-world construction, achieving 30% more efficient planning processes and catching costly errors before they're built into concrete and steel.

Guided Assembly overlays digital work instructions directly onto components being assembled. Boeing implemented AR glasses for wire harness assembly, a process requiring technicians to navigate 130 miles of wiring per aircraft. Rather than cross-referencing paper diagrams, technicians now see step-by-step guidance anchored to actual components while keeping both hands free. The result: error rates dropped to zero and wiring production time fell by 25%.

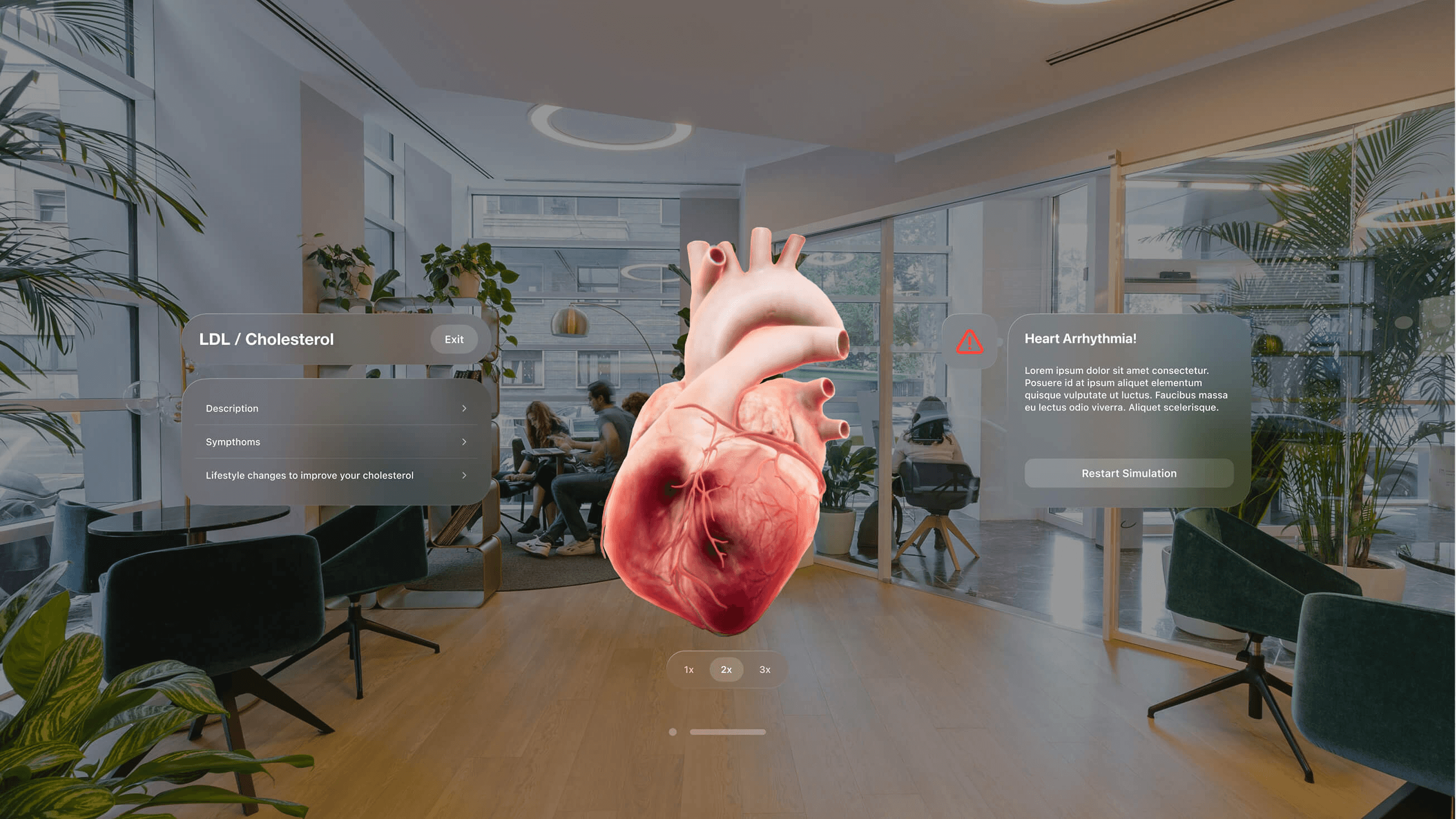

Healthcare & Life Sciences

Healthcare holds the largest market share in enterprise adoption, with 40% of healthcare providers using VR for patient treatment and staff training. The market is projected to grow from $610M (2018) to $4.2B (2026).

Patient Education converts static medical data into immersive, understandable narratives, directly addressing health literacy gaps. By visualizing invisible risk factors, such as cholesterol accumulation or hemodynamic changes, patients can directly observe the cause-and-effect relationship of their lifestyle choices. The CardioCompass platform leverages spatial computing and WebGL to model cardiovascular conditions, bridging the gap between clinical diagnosis and patient comprehension through interactive risk factor simulation.

Pre-operative Planning transforms CT and MRI scans into patient-specific 3D holograms. Surgeons rehearse complex procedures on Digital Twins of actual patient anatomy, identifying challenges before making the first incision. For liver resections, MR-guided planning improved understanding of vascular anatomy and tumor location, enabling more accurate surgery while preserving larger residual liver volume.

Medical Training enables unlimited repetition of procedures without patient risk. When Imperial College Healthcare NHS Trust tested MR simulation for trauma preparedness, attendees achieved and sustained higher clinical knowledge scores and were more likely to receive positive supervisor appraisals, evidence that simulated practice can translate to real-world competence.

Automotive & Aerospace

Aerospace and automotive manufacturers face dizzying complexity and stakes where errors cost millions.

Design Reviews at 1:1 scale allow engineering teams to evaluate vehicles as full-size holograms rather than clay models or flat renders. Mixed reality can shorten design reviews from days to hours, as physical mockups are replaced with life-size digital objects editable in real time. Teams collaborate from anywhere in the world, and companies save tens of thousands of dollars per iteration while enabling more design cycles before production.

Pilot Training provides realistic procedure practice without aircraft downtime. Traditional flight simulators cost thousands per hour; an actual fighter jet costs over $40,000 per hour to operate. American Airlines uses VR for cabin crew certification, and the results speak for themselves: first-trial passing scores on door arming procedures improved from 73% to 97%.

Architecture, Engineering & Construction

AEC firms use MR to catch costly errors before they become permanent.

On-Site Visualization overlays BIM data directly onto construction sites. Project managers wearing MR headsets see where pipes, conduits, and structural elements should be installed, comparing digital plans against physical progress in real time. Clashes between systems (a pipe routed through where a beam should go) become visible before concrete is poured rather than after, when fixes require expensive rework.

Remote Collaboration enables architects, engineers, and clients to walk through full-scale holographic building models together regardless of location. Design changes are visualized instantly, accelerating approval cycles and reducing the miscommunication that derails projects.

Retail & E-commerce

Retailers use MR to overcome the visualization gap that causes purchase hesitation.

Virtual Try-On with proper occlusion lets customers place furniture in their actual living rooms with accurate scale and lighting. Unlike simple AR, MR shows the sofa correctly disappearing behind an existing coffee table, maintaining spatial realism that builds confidence for major purchases. Lowe's identified that 32% of home improvement projects are abandoned before starting, representing $70 billion in unrealized sales. AR visualization helps customers see exactly how projects will look, reducing both hesitation and returns.

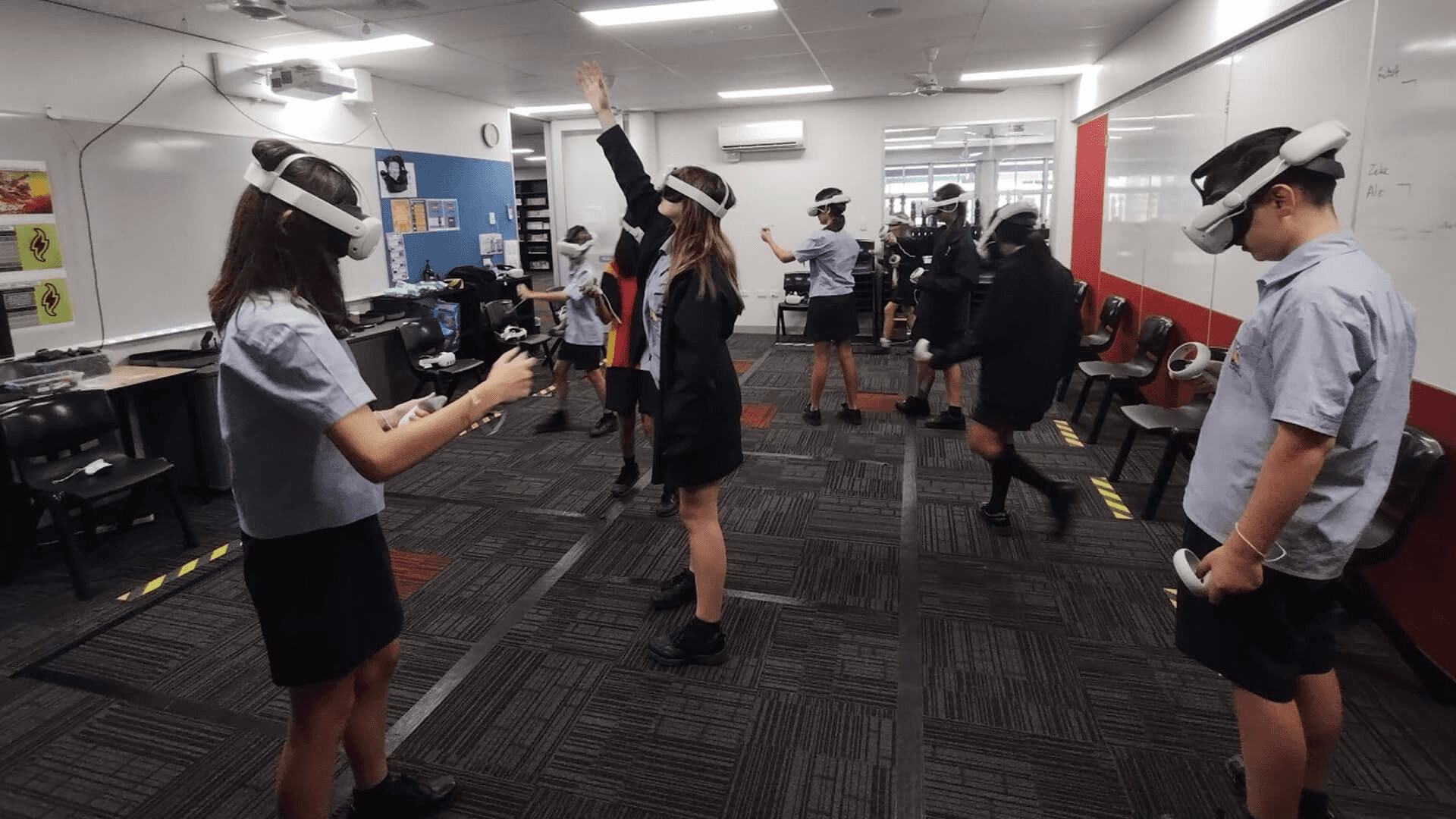

Education

Educational institutions use MR to make abstract concepts tangible and dangerous experiments safe.

Vocational Career Exploration Platforms like Transfr Trek are changing how workforce development works. This technology allows job seekers to virtually step into a "day in the life" of skilled trades, from aviation maintenance to electrical construction, before committing to a degree program. By simulating the actual work environment, candidates can assess their aptitude and interest early, effectively democratizing access to careers and reducing churn in workforce training.

Experiential Learning transforms abstract concepts into spatial, interactive experiences. Newton's Room is a mixed reality physics education experience where users apply Newton's laws of physics to solve challenging puzzles that adapt and respond to their physical environment. Built on Meta Quest's Presence Platform, the interactive, problem-solving design encourages active learning and fosters a deep understanding of Newtonian physics, turning equations into intuitive spatial reasoning as virtual objects interact with real desks, walls, and floors.

Corporate Training

Enterprises use MR to accelerate skill development and practice high-stakes scenarios safely.

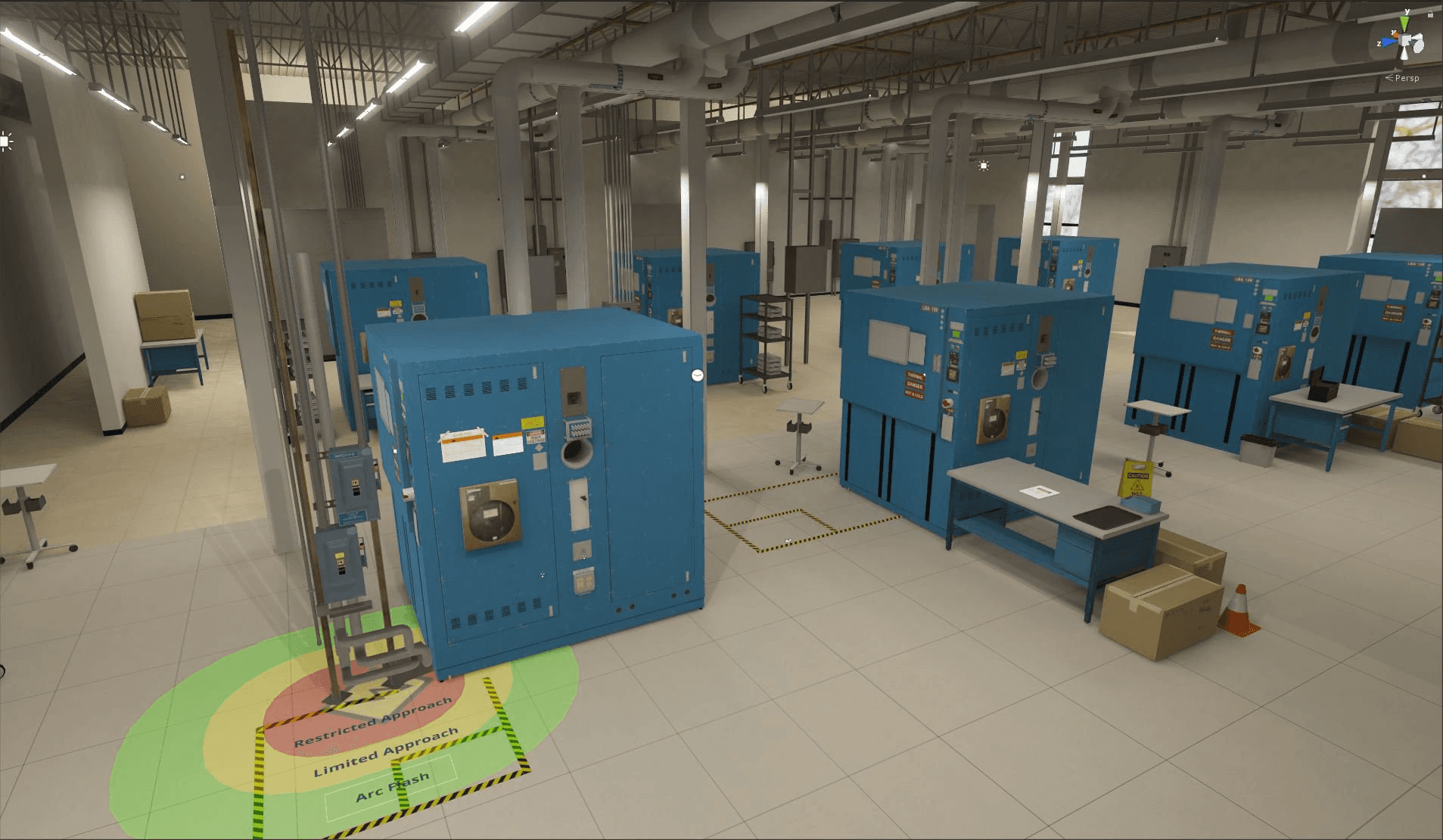

Safety Training places employees in realistic hazardous situations without actual risk. After Intel experienced 24 electrical incidents between 2015 and 2017, costing over one million dollars, the company created a virtual Electrical Safety Recertification course. The program achieved an estimated 300% ROI over five years, and 94% of trainees requested more VR courses.

Soft Skills Development uses avatar interactions to practice difficult conversations. Within the VR environment, trainees have the sensation of feeling and thinking like another person, building genuine empathy rather than just memorizing scripts. Bank of America found 97% of employees feel confident applying what they learned in VR, and is now scaling the program to 200,000 workers.

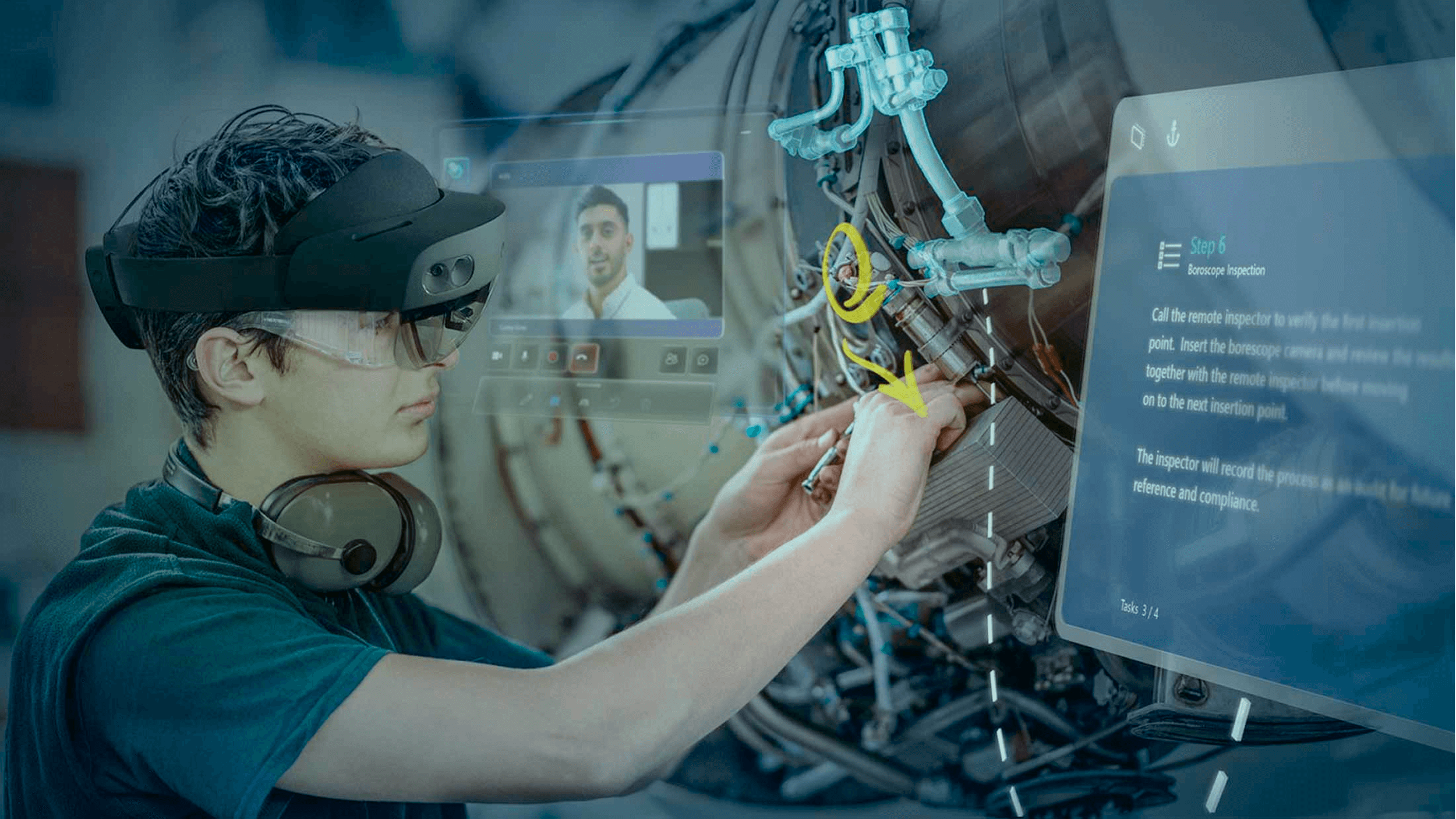

Remote Expert Assistance

Real-Time Collaboration eliminates travel for complex repairs. Unilever faced a sobering reality: 330 years of collective experience would walk out the door as one European factory's workforce retired. They worked with ScopeAR so junior technicians could collaborate with experts remotely, with annotations mapped directly onto equipment. Downtime dropped 50%, delivering ROI of 1,717% on the initial investment.

DHL reported 15% productivity gains from AR glasses in logistics operations that compound across thousands of workers and millions of packages.

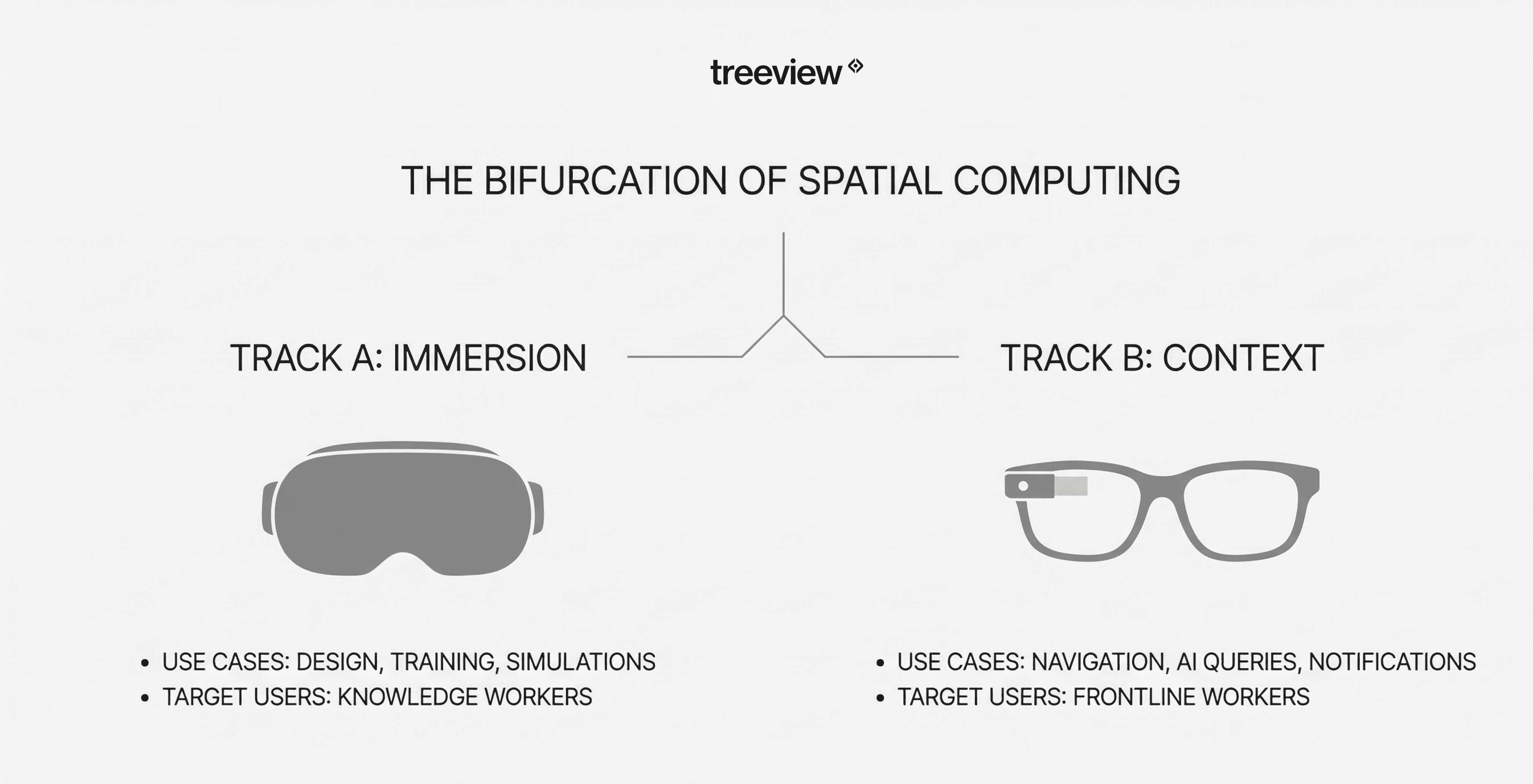

The Bifurcation of Spatial Computing: Headsets vs Smart Glasses

A strategic shift is underway in spatial computing hardware. Both Apple and Meta have recognized that a single device cannot serve all use cases optimally. The market is bifurcating into two distinct hardware tracks:

Track A: Immersion Devices

High-fidelity headsets like the Vision Pro and Quest 3 target scenarios requiring deep immersion: 3D design visualization, immersive training simulations, and spatial computing workflows where physics and occlusion accuracy matter. These devices aim to replace monitors and laptops for focused work sessions.

Track B: Context Devices

Lightweight smart glasses like Ray-Ban Meta target "contextual AI" use cases: look-and-ask queries, navigation, quick notifications, and capturing memories. These devices prioritize social acceptability and all-day wearability over visual fidelity. They aim to reduce smartphone dependence rather than replace computers.

Ray-Ban Meta glasses sales tripled in the first half of 2025, with 2 million units sold since launch. Global smart glasses shipments jumped 110% in H1 2025, with Meta capturing approximately 70% of the market. The "Glasshole" stigma that plagued Google Glass has faded as these devices now look indistinguishable from regular eyewear.

Enterprise Implication: For frontline workers requiring hands-free communication and quick information access, smart glasses are often superior because they are safety-compliant, do not block peripheral vision, and can be worn throughout a shift. For knowledge workers requiring extensive digital workspace, headsets provide the infinite canvas.

The Destination: Unified Hardware

While the market is currently split, the ultimate end goal is a unified form factor: a device as lightweight and socially acceptable as a normal pair of glasses, yet capable of full-fidelity immersion. Meta’s Orion prototype serves as the industry’s "North Star" for this destination.

Unlike current smart glasses which are limited to 2D information, Orion utilizes holographic displays and a neural interface (wrist-based EMG) to overlay interactive 3D content onto the physical world without the bulk or isolation of a headset. By bridging the gap between "Track A" immersion and "Track B" wearability, Orion demonstrates a future where users no longer have to choose between a powerful digital workspace and being present in the physical world.

Data Security and Enterprise Deployment

MR devices generate sensitive data including spatial maps of facilities, biometric data, user gaze patterns, and real-time video feeds. Enterprise deployment requires addressing several security considerations:

Spatial Data Sovereignty: Where is the 3D mesh of your facility stored and processed? Some vendors offer on-premises deployment options for sensitive environments.

Biometric Data Security: This represents the most sensitive category of data. MR devices capture physiological markers, including iris signatures, eye movement, and behavioral responses, in far greater depth than standard 2D technology. Because this data can reveal psychological states and intent, enterprise governance must strictly control how it is collected, ensuring on-device processing and preventing unauthorized profiling.

Identity and Access: Integration with enterprise identity providers (Microsoft Entra ID, formerly Azure AD, or Okta) enables single sign-on and consistent access policies.

Device Management: MDM platforms like Microsoft Intune and ArborXR enable centralized policy enforcement, app deployment, and remote wipe capabilities.

Meta Horizon Managed Solutions for Work provides enterprise device management, including shared device modes that eliminate the need for personal Meta accounts. HoloLens 2 integrates natively with Microsoft's security infrastructure, including Microsoft Entra ID (formerly Azure Active Directory), Intune, and Conditional Access policies.

Leaders in Mixed Reality Development

Selecting a Mixed Reality development partner requires matching their engineering capabilities to your specific use case. The MR development industry spans from creative agencies to enterprise-focused engineering firms, each optimized for different project types and industries.

Most enterprise applications are built on two primary real-time 3D engines: Unity and Unreal Engine. Selecting a partner with deep expertise in these specific engines is crucial for ensuring your application can handle high-fidelity physics and complex data integrations.

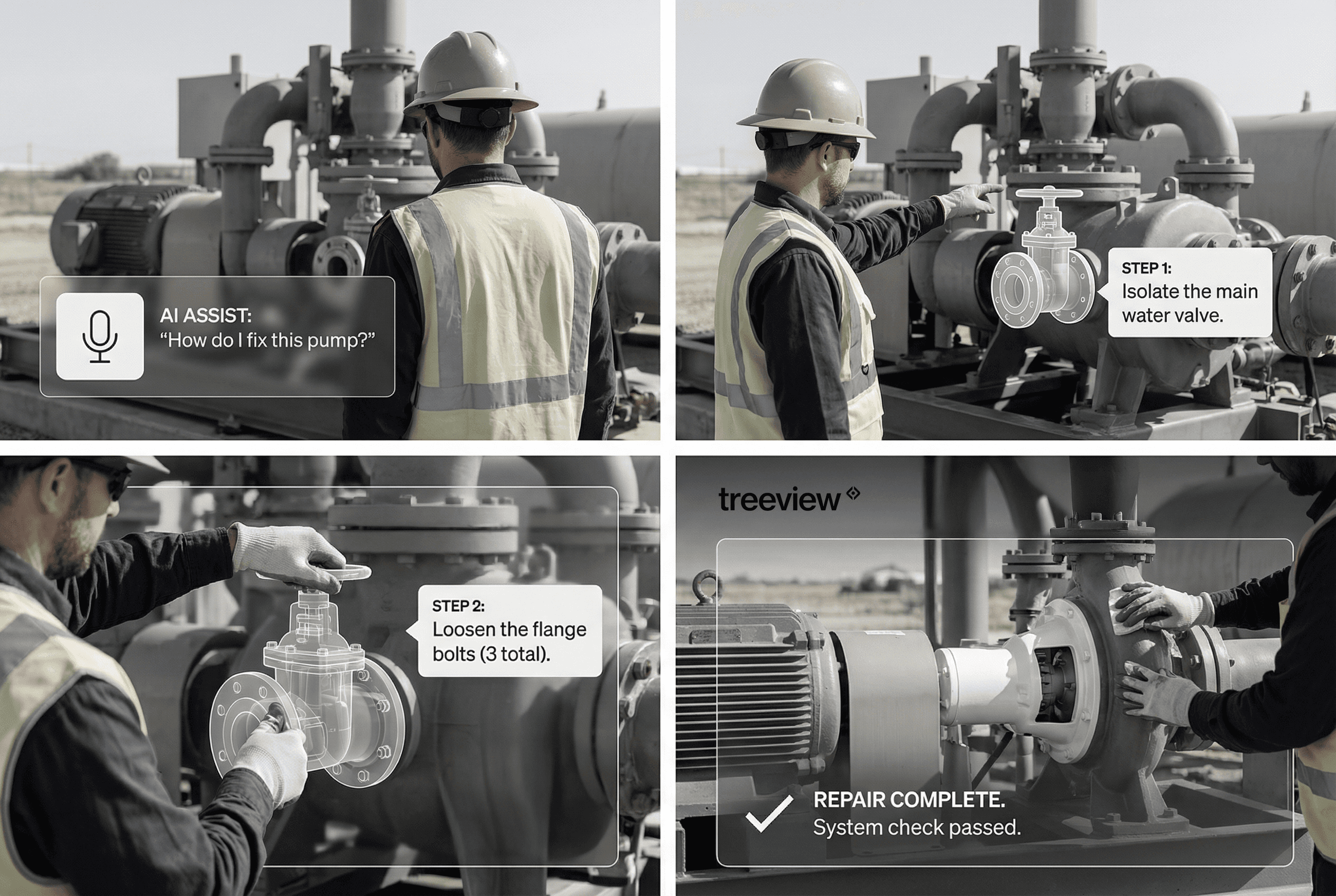

Treeview

Treeview is an XR studio that builds Mixed Reality software for the world's most innovative enterprises. Since 2016, Treeview has partnered with high-impact organizations to deliver world-class XR apps. The company is driven by a vision to help shape the next technological revolution and believes spatial computing will become the ultimate bridge between humanity and technology.

Bit Space

Bit Space is a creative technology studio specializing in accessible VR and MR experiences for education and cultural institutions. With a strong portfolio spanning schools, museums, and non-profits, Bit Space focuses on making immersive technology approachable for diverse audiences without requiring technical expertise from end users.

Sensorama

Sensorama is a creative lab that develops experimental XR prototypes and visual installations. Their approach prioritizes artistic vision and innovative interaction design, making them well-suited for brands and artists seeking boundary-pushing experiences that emphasize emotional impact and creative expression.

EON Reality

EON Reality provides enterprise XR platforms that enable organizations to build and maintain their own immersive content. Rather than offering custom development, EON Reality's DIY toolset approach serves large enterprises with internal technical teams who want control over content creation and iteration at scale.

CitrusBits

CitrusBits is a mobile development agency with deep expertise in consumer-facing AR applications. Their focus on polished smartphone experiences and app store deployment makes them ideal for brands launching AR-enabled product experiences and retailers implementing virtual try-on features.

MR Development Studios - Quick Comparison Guide

| Company | Primary Focus & Model | Best Use Case | Notable Clients | Key Strength |
|---|---|---|---|---|
| Treeview | End-to-End Custom Dev (Project-based / Strategic Partner) | High-impact enterprise applications requiring complex engineering & long-term support. | Microsoft, Medtronic, Meta, Daiichi Sankyo, NEOM | Enterprise Reliability: Combines startup speed with senior engineering; guarantees full IP ownership transfer to client. |
| Bit Space | Education & Training (Project-based) | Workforce development, technical trade simulations, and cultural preservation. | PCL Construction, Manitoba Institute of Trades & Technology, Georgian College | Accessibility: Specialized in making complex technical training (e.g., welding, mining) accessible to non-technical users. |
| Sensorama | Creative Production (Studio / Production House) | Marketing activations, 360° video tours, and artistic brand experiences. | Lenovo, Volkswagen, DTEK, Tina Karol, ABB | Artistic Vision: Strong focus on high-fidelity visuals, 360° video production, and emotional narrative design. |
| EON Reality | SaaS Platform (Subscription / License) | Large-scale knowledge transfer where internal teams create their own content. | Governments (e.g., Saudi Arabia), Educational Institutions, Energy Sectors | Scalability: "No-code" DIY platform allows organizations to deploy standard content to thousands of users quickly. |
| CitrusBits | Mobile Innovation (Agency / Staff Augmentation) | Consumer-facing mobile AR (e.g., product packaging, retail apps) & healthcare interfaces. | Zoetis, DuPont, Burger King, Quiksilver | Mobile Polish: Deep expertise in mobile app ecosystems (iOS/Android) ensures seamless consumer store deployment. |

For a more comprehensive ranking of development partners, we invite you to explore our detailed guides: Top Mixed Reality Development Studios and Top Spatial Computing Development Companies.

The Future of MR: 2026-2030 Roadmap

2026 Trends in Mixed Reality

As MR matures from experimental to mainstream adoption, seven key trends will define the year ahead:

Smart Glasses Go Mainstream: Following the breakout success of Meta’s Ray-Ban glasses, the focus is shifting from bulky headsets to stylish, lightweight eyewear. Expect new "context-first" devices from Snap and Android XR that look like normal glasses but deliver real-time digital info.

AI as the Engine of MR: AI is unlocking capabilities beyond simple voice commands. In 2026, on-device AI will power real-time object recognition, precise hand tracking, and generative content, turning MR into a truly context-aware interface.

Enterprise Adoption Accelerates: While consumers explore games, businesses are finding deep ROI. Industries like healthcare, mining, and manufacturing are scaling MR for hazard training and remote collaboration to cut costs and improve safety.

Next-Gen Displays: The race is on for lighter, clearer visuals. Innovations in MicroLED and waveguide optics from Apple, Meta, and Magic Leap are finally reducing device size while delivering hyper-realistic clarity.

Specialized XR Chips: The era of repurposed phone chips is over. Dedicated silicon (like Apple’s R1 and Snapdragon XR) is now standard, enabling low-latency tracking and longer battery life without the thermal issues of the past.

The "Free-to-Play" Gaming Boom: Social, free-to-play titles are driving mass adoption among Gen Z. Hits like Gorilla Tag (10M+ players, $100M revenue) and Animal Company prove that social connection trumps graphical fidelity in the fight for users.

Immersive Video: Led by Apple TV+ and visionaries like James Cameron, video is moving from "watching" to "experiencing." 180° and 360° formats are setting new standards for storytelling, placing the viewer directly inside the narrative.

Near-Term: 2026-2027

Apple: Vision Pro 2 on the horizon, with the M5 Chip already launched. Apple Smart Glasses (Project N50) targeting 2027, focused on AI + Audio + Cameras rather than full AR display.

Meta: Meta Ray-Ban Display launched September 2025 with a full-color in-lens display at $799. Orion consumer AR glasses targeting 2027 with holographic overlay, though expected at premium pricing. Meta Quest Pro expected to launch towards the end of 2026.

Samsung/Google: Galaxy XR headset launched 2025 competing with Vision Pro. Android XR smart glasses expected 2026.

Anduril: The EagleEye headset, unveiled in October 2025, represents a massive leap in military XR. Developed in partnership with Meta, Qualcomm, and Gentex, it integrates Anduril's Lattice AI software directly into a ruggedized mixed reality system. Unlike consumer devices, it is designed as a "digital teammate" for battlefield command, featuring integrated night vision, threat detection, and drone control.

The "iPhone Moment" for MR

Industry observers suggest we are in the "BlackBerry phase" of smart glasses: functional but not yet transformative. The evolution mirrors that of mobile phones, from bricks (1990s) to BlackBerry (2000s) to iPhone (2007). The consensus timeline places true AR glasses achieving smartphone replacement around 2030-2035.

The industry has arguably not yet found its "killer app." Unlike the Personal Computer, which found universal utility in productivity suites (Word, Excel), or Mobile, which exploded through universal communication and social media, Mixed Reality currently lacks a ubiquitous software equivalent.

Currently, the ecosystem is primarily defined by single use-case applications. While this specificity currently limits mass consumer adoption, it creates immediate, high-impact value for the enterprise sector. Organizations are successfully deploying these focused tools to address specific, complex industry challenges, delivering ROI through the depth of the solution rather than the breadth of the application.

Wrapping Up

Mixed Reality is moving from an experimental technology to a proven tool for production deployment. The market fundamentals are strong: enterprise adoption is projected to account for 60% of the industry’s revenue by 2030, and ROI has been documented across multiple industries.

The technology will continue evolving rapidly through 2030. Early adoption positions organizations to build institutional knowledge, develop internal expertise, and influence platform roadmaps through partnership with vendors. The alternative, waiting for technology "maturity," risks falling behind competitors who are capturing productivity gains today.

We recommend starting with a Proof of Concept (POC) Strategy that targets a single, measurable KPI. A successful POC provides the data needed to secure stakeholder buy-in for a full-scale deployment.

If you need a world-class MR development team to build your next idea, Treeview is here to help. As a specialized mixed reality development studio, we partner with enterprises to build MR software that drives business impact.

For additional questions, check out our FAQ or reach out.

Frequently Asked Questions (FAQs) About Mixed Reality

Q1. Is Mixed Reality the same as Spatial Computing?

No. Spatial Computing is the umbrella term encompassing all technologies that blend physical and digital space, including VR, AR, and MR. Mixed Reality is the specific subset of Spatial Computing where digital content understands and responds to your physical environment in real time.

Q2. How much does a custom Mixed Reality application cost?

Industry standards fall into three tiers:

Proof of Concept / MVP: $50,000 to $100,000

Production Pilot: $100,000 to $300,000

Enterprise Scale: $300,000 and above

These figures assume a professional XR development partner. Consumer-grade template apps cost less but rarely survive enterprise security and compliance requirements.

Successful Mixed Reality investments rely less on development fees and more on selecting a partner with a proven track record in this complex medium. Organizations should prioritize teams that balance enterprise-grade quality with agile execution, ensuring the product can adapt quickly to market needs. Crucially, look for agreements that guarantee full IP ownership to avoid vendor lock-in and foster a long-term strategic partnership, where the focus extends beyond the initial launch to the continuous evolution of the solution.
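As a rough illustration, the three budget tiers above can be expressed as a simple lookup. This is a minimal sketch: the function name `budget_tier` and the decision to place a budget that sits exactly on a boundary (for example $100,000) in the higher tier are assumptions made for this example, not part of any industry standard.

```python
from typing import Optional

# Tier boundaries in USD, taken from the ranges quoted above.
# The upper bound of None marks the open-ended "and above" tier.
TIERS = [
    ("Proof of Concept / MVP", 50_000, 100_000),
    ("Production Pilot", 100_000, 300_000),
    ("Enterprise Scale", 300_000, None),
]


def budget_tier(budget_usd: float) -> Optional[str]:
    """Return the tier a budget falls into, or None if it is below the PoC range.

    Assumption: a budget exactly on a boundary is assigned to the higher tier.
    """
    for name, low, high in TIERS:
        if budget_usd >= low and (high is None or budget_usd < high):
            return name
    return None


if __name__ == "__main__":
    for amount in (75_000, 250_000, 500_000):
        print(amount, "->", budget_tier(amount))
```

A budget below $50,000 returns `None`, which matches the note above that cheaper template apps sit outside these tiers.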

Q3. What is the difference between VR, AR, and MR?

Virtual Reality (VR): The real world is blocked entirely. You exist in a fully synthetic environment.

Augmented Reality (AR): Digital content overlays the real world but doesn't interact with it.

Mixed Reality (MR): Digital content understands your physical space. Virtual objects occlude behind real furniture and remain spatially anchored as you move.

If your use case requires virtual content to "know" where the floor is or hide behind a real wall, you need MR.

Q4. Can Mixed Reality be used without a headset?

Technically, yes: mobile devices running ARKit or ARCore deliver limited spatial awareness. However, production MR's defining characteristics (hands-free operation, peripheral vision, persistent spatial anchoring) require a head-mounted display. Mobile AR works for consumer marketing and proof-of-concept demos, but field technicians and surgeons need their hands free.

Q5. Does Mixed Reality require an internet connection?

It depends. Devices like Quest 3 and HoloLens 2 process everything locally, so no connection is required. Cloud rendering enables lighter hardware but requires 5G with sub-20ms latency. Most enterprise deployments operate standalone with periodic cloud sync for content updates.

Q6. Is Mixed Reality safe for industrial environments?

Yes, with hardware distinctions. Optical See-Through devices like HoloLens 2 Industrial Edition are certified for hazardous environments and add zero latency to the user's view of the real world. Video Passthrough devices introduce a delay of roughly 12ms and are not yet certified for safety-critical applications where split-second hazard perception is required.

Q7. What are the main barriers to Mixed Reality adoption?

Three primary obstacles: Data Silos (MR requires integration with ERP, PLM, and CRM systems), Hardware Comfort (current headsets remain too heavy for all-day wear), and Change Management (training, process redesign, and cultural acceptance). Technology procurement is easy; organizational adoption is where projects stall.

A common technical hurdle is interoperability: your 3D assets must be optimized for each target platform (e.g., USDZ for Apple, glTF for generic web/Android). Ensuring your data pipeline supports these standards is essential for preventing data silos.
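To make the interoperability point concrete, here is a minimal sketch of how an asset pipeline might decide which export formats it needs. The mapping follows the examples in the text (USDZ for Apple, glTF for generic web/Android); the platform labels and the `export_targets` helper are hypothetical names chosen for this illustration, not part of any vendor SDK.

```python
# Platform-to-format mapping based on the examples in the text.
# The platform keys are hypothetical labels for this sketch.
ASSET_FORMAT_BY_PLATFORM = {
    "visionos": "usdz",
    "ios": "usdz",
    "android": "gltf",
    "web": "gltf",
}


def export_targets(platforms):
    """Return the sorted list of formats a pipeline must export for these platforms."""
    unknown = [p for p in platforms if p.lower() not in ASSET_FORMAT_BY_PLATFORM]
    if unknown:
        # Fail loudly so an unsupported platform is caught before content ships.
        raise ValueError(f"No format mapping for: {', '.join(unknown)}")
    return sorted({ASSET_FORMAT_BY_PLATFORM[p.lower()] for p in platforms})


if __name__ == "__main__":
    print(export_targets(["visionOS", "web"]))
```

A deployment that targets both Apple devices and the web would therefore need both formats in its pipeline, which is the kind of requirement worth surfacing early in a project.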

Q8. Will Apple Vision Pro replace HoloLens 2 in enterprise?

Yes, Apple is actively driving this transition. The Vision Pro Enterprise team is focusing heavily on the commercial sector. Consequently, we are seeing a direct replacement of legacy HoloLens deployments with Apple’s hardware, as enterprises migrate workflows to leverage the Vision Pro’s superior visual fidelity, computing power, and ecosystem momentum.

Q9. How does Mixed Reality reduce training costs?

Three mechanisms: Faster retention (10-15% better test scores, 30-40% less training time), Eliminated travel (remote expert assistance without physical presence), and Risk-free practice (unlimited repetition without equipment downtime or safety exposure). A 2025 Forrester study documented up to 90% training cost reduction versus classroom methods.

Q10. Does Mixed Reality cause motion sickness?

It can, though the newest generation of headsets has largely addressed it. Motion sickness results from latency between head movement and display update. The comfort threshold for long-term use is approximately 12 milliseconds, which the Vision Pro achieves, while many devices operate at 35ms or higher. Optical See-Through devices like HoloLens 2 add zero latency to the real-world view, making them the safest choice for sensitive users.
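The latency figures above can be compared with a small helper. This is a sketch under stated assumptions: the 12 ms threshold is the approximate figure quoted in this answer, the function names are illustrative, and the 90 Hz refresh rate in the usage example is an assumed value rather than the specification of any particular device.

```python
COMFORT_THRESHOLD_MS = 12.0  # approximate comfort threshold quoted in the text


def frame_time_ms(refresh_hz: float) -> float:
    """Time between display refreshes, in milliseconds."""
    if refresh_hz <= 0:
        raise ValueError("refresh_hz must be positive")
    return 1000.0 / refresh_hz


def within_comfort_threshold(latency_ms: float,
                             threshold_ms: float = COMFORT_THRESHOLD_MS) -> bool:
    """True if a measured motion-to-photon latency is at or below the threshold."""
    return latency_ms <= threshold_ms


def latency_in_frames(latency_ms: float, refresh_hz: float) -> float:
    """Express a latency as a number of display frames at a given refresh rate."""
    return latency_ms / frame_time_ms(refresh_hz)


if __name__ == "__main__":
    for latency in (12.0, 35.0):
        verdict = "within" if within_comfort_threshold(latency) else "above"
        frames = latency_in_frames(latency, refresh_hz=90.0)  # assumed 90 Hz display
        print(f"{latency} ms is {verdict} the threshold ({frames:.2f} frames at 90 Hz)")
```

Expressing latency in frames is a convenient way to compare devices with different refresh rates: at an assumed 90 Hz, a 12 ms delay is roughly one frame, while 35 ms spans more than three.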